Browsing the Glasgow ganglia plots on Saturday night I noticed a very weird situation, where the load was going over the number of job slots and an increasing amount of CPU was being consumed by the system.

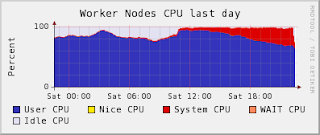

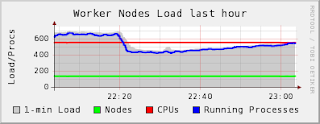

It took a while to work out what was going on, but I eventually tracked it down to /tmp on certain worker nodes getting full - there was an out of control athena.log file in one ATLAS user's jobs which was reaching >50GB. Once /tmp was full it crippled the worker node and other jobs could not start properly - atlasprd jobs untarring into /tmp stalled and the system CPU went through the roof.

Recovering from this was a serious job - the offending user's jobs had to be cancelled, and a script written to clear out the /tmp space. After that the stalled jobs also had to be qdel'ed, because they could not recover.
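For illustration only (this is not the script that was actually run), here is a minimal Python sketch of the first half of such a cleanup: finding the oversized files under /tmp so they can be inspected before anything is deleted. The function name `find_large_files` and the 1 GiB threshold in the usage note are assumptions made for the example.

```python
import os
import stat


def find_large_files(root, min_bytes):
    """Return (size, path) pairs for regular files under root that are
    at least min_bytes in size, largest first."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                # lstat so that symlinks are not followed out of the tree
                st = os.lstat(path)
            except OSError:
                # The file vanished or is unreadable: skip it
                continue
            if stat.S_ISREG(st.st_mode) and st.st_size >= min_bytes:
                hits.append((st.st_size, path))
    hits.sort(reverse=True)
    return hits
```

Calling `find_large_files("/tmp", 1024**3)` on a worker node would list everything of 1 GiB or more, which should make a runaway log file like the athena.log above stand out. Deletion is deliberately left out of the sketch, since removing files from under running jobs needs a human decision.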

This did work - the load came back down under the red line and then filled back up as working jobs came in, as can be seen from the ganglia plots.

This clearout was done between 2230 and 2400 on a Saturday night, which royally p***ed me off - but I knew that if I left it until Monday the whole site would be crippled.

I raised a GGUS ticket against the offending user. Naturally there wasn't a response until Monday; however, it did prove that it is possible to contact a VO user through GGUS.

Lessons to learn: we clearly need to monitor disk on the worker nodes, both /home and /tmp. The natural route to do this is through MonAMI, with trends monitored in ganglia and alarms in nagios. Of course, we need to get nagios working again on svr031 - the president's brain will be re-inserted next week! In addition, perhaps we want at least a group quota on /tmp, so that VOs can kill themselves but not other users.
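As a rough idea of what the alarm side could look like, here is a minimal Python sketch of a disk-fullness check. It is not a MonAMI or nagios configuration. The 80% and 90% thresholds and the function names are assumptions for the example; the return values follow the usual nagios plugin convention of 0 for OK, 1 for WARNING and 2 for CRITICAL.

```python
import shutil

# Conventional nagios plugin exit codes
OK, WARNING, CRITICAL = 0, 1, 2


def classify(used_fraction, warn=0.80, crit=0.90):
    """Map a used-space fraction (0.0 to 1.0) to a nagios-style status.
    The default thresholds are illustrative, not tuned values."""
    if used_fraction >= crit:
        return CRITICAL
    if used_fraction >= warn:
        return WARNING
    return OK


def check_path(path, warn=0.80, crit=0.90):
    """Return (status, used_fraction) for the filesystem holding path."""
    usage = shutil.disk_usage(path)
    # Guard against pseudo-filesystems that report a zero total size
    used_fraction = usage.used / usage.total if usage.total else 0.0
    return classify(used_fraction, warn, crit), used_fraction
```

Running `check_path("/tmp")` and `check_path("/home")` on each worker node and exiting with the worse of the two statuses would cover both areas mentioned above. The same used fraction could equally be published as a metric for trend plots.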
