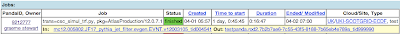

Finally we are green for the latest Atlas releases...

We've made a lot of progress this past week with ECDF. It all started on Friday 10th Oct when we were trying to solve some Atlas installation problems in a somewhat ad hoc fashion.

We then incorrectly tagged and published the site as having a valid production release. This caused serious problems with the Atlas jobs, which resulted in us being taken out of the UK production and missing out on a lot of CPU demand. This past week we've been working hard to solve the problem and here are a few things we found:

1) First of all there were a few access problems to the servers for a few of us. So it was hard to see what was actually going on with the mounted atlas software area. Some of this has now been resolved.

2) The installer was taking ages and then timing out (proxy and also SGE killing it off eventually). strace on the nodes linked this to very slow performance while doing many chmod writes to the file system. We solved this with a two-fold approach:

- Alessandro modified the installer script to be more selective regarding which files need chmoding, but the system was still very slow.

- The nfs export was then changed to allow asynchronous writes, which helped speed up the tiny writes to the underlying LUN considerably. There is now a worry about possible data corruption, so this should be borne in mind if the server goes down and/or we have Edinburgh specific segv/problems with a release. Orlando may want to post more information about the nfs changes later.
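The idea behind the first fix, only touching files that actually need a mode change, can be sketched roughly as below. This is not Alessandro's installer code: the function name `chmod_where_needed` and the choice of group-write as the wanted permission bit are assumptions made purely for illustration.

```python
import os
import stat


def chmod_where_needed(root, wanted_bits=stat.S_IWGRP):
    """Add wanted_bits to regular files under root, skipping files that already have them.

    Returns the number of chmod calls actually issued.
    """
    changed = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue  # leave symlinks alone
            mode = stat.S_IMODE(os.stat(path).st_mode)
            if mode & wanted_bits == wanted_bits:
                continue  # already correct, so no write hits the file system
            os.chmod(path, mode | wanted_bits)
            changed += 1
    return changed
```

Each skipped file still costs a stat, but that is a read, and it was the small synchronous writes that were hurting on the NFS mounted area.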

3) The remover and installer used ~3GB and 4.5GB of vmem respectively, and the 6GB vmem limit had only been applied to prodatlas jobs. The 3GB vmem default started causing serious problems for sgmatlas. This has now been changed to 6GB.

We're also planning to add "qsub -m a -M" SGE options on the CE to allow the middleware team to better monitor the occurrence of vmem aborts. We might also add a flag to help parse the SGE account logs for APEL. Note: the APEL monitoring problem has been fixed. However, that's for another post (Sam?)...
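For reference, `-m a` asks SGE to send mail when a job is aborted and `-M` sets the address the mail goes to. A minimal sketch of how a submission wrapper might assemble such a command line follows; the helper name `qsub_command`, the script name and the address are all hypothetical, and this is not the CE's actual job manager code.

```python
def qsub_command(script, mail_to):
    """Build a qsub argument list that mails mail_to if the job is aborted."""
    # -m a : send mail on job abort; -M : recipient address
    return ["qsub", "-m", "a", "-M", mail_to, script]


# Hypothetical usage: the list could be handed to subprocess.run() on a submit host.
example = qsub_command("install_release.sh", "middleware-team@example.org")
```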

Well done to Orlando, Alessandro, Graeme and Sam for helping us get to the bottom of this!

Showing posts with label UKI-SCOTGRID-ECDF. Show all posts

Saturday, October 18, 2008

Monday, August 25, 2008

SAM Failures across scotgrid: Someone else's problem

All 3 scotgrid sites have just failed the atlas SAM SE tests (atlas_cr, atlas_cp, atlas_del), as have quite a lot of the rest of the UKI-* sites.

Once again this isn't a Tier-2 issue but an upstream problem with the tests themselves

ATLAS specific test launched from monb003.cern.ch

Checking if a file can be copied and registered to svr018.gla.scotgrid.ac.uk

------------------------- NEW ----------------

srm://svr018.gla.scotgrid.ac.uk/dpm/gla.scotgrid.ac.uk/home/atlas/

+ lcg-cr -v --vo atlas file:/home/samatlas/.same/SE/testFile.txt -l lfn:SE-lcg-cr-svr018.gla.scotgrid.ac.uk-1219649438 -d srm://svr018.gla.scotgrid.ac.uk/dpm/gla.scotgrid.ac.uk/home/atlas/SAM/SE-lcg-cr-svr018.gla.scotgrid.ac.uk-1219649438

Using grid catalog type: lfc

Using grid catalog : lfc0448.gridpp.rl.ac.uk

Using LFN : /grid/atlas/dq2/SAM/SE-lcg-cr-svr018.gla.scotgrid.ac.uk-1219649438

[BDII] sam-bdii.cern.ch:2170: Can't contact LDAP server

lcg_cr: Host is down

+ out_error=1

+ set +x

-------------------- Other endpoint same host -----------
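The giveaway in the log above is the `[BDII] ... Can't contact LDAP server` line: the test could not reach its own information system before it ever got as far as our SE. A rough sketch of how one might pick such upstream failures out of SAM output automatically is below; the function name and the single pattern are assumptions based only on this one log, not on any real SAM or nagios tooling.

```python
import re

# Patterns suggesting the failure is in the test infrastructure, not at the site.
UPSTREAM_PATTERNS = [
    re.compile(r"^\[BDII\] (?P<host>\S+?):\d+: Can't contact LDAP server"),
]


def upstream_failures(log_text):
    """Return the BDII hosts that the test itself could not contact."""
    hosts = []
    for line in log_text.splitlines():
        for pattern in UPSTREAM_PATTERNS:
            match = pattern.search(line.strip())
            if match:
                hosts.append(match.group("host"))
    return hosts
```

A non-empty result is a hint to look upstream first rather than at the Tier-2.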

Labels: ATLAS, monitoring, nagios, SAM, UKI-SCOTGRID-DURHAM, UKI-SCOTGRID-ECDF, UKI-SCOTGRID-GLASGOW

Friday, June 13, 2008

Problems up north

We have two major problems in ScotGrid right now:

ECDF: Have been failing SAM tests for over a week now. The symptom is that the SAM test is submitted successfully, runs correctly on the worker node, but then job outputs never seem to get back to the WMS, so eventually the job is timed out as a JS failure. As usual we cannot reproduce the problem with dteam or ATLAS jobs (in fact ATLAS condor jobs are running fine) so we are hugely puzzled. Launching a manual SAM test through the CIC portal doesn't help because the test gets into the same state and hangs for 6 hours - so you cannot submit another one. Sam has asked for more network ports to be opened to have a larger globus port range, but the network people in Edinburgh seem to be really slow in doing this (and it seems it is not the root cause anyway).

Durham: Have suffered a serious pair of problems on their two SE hosts. The RAID filesystem on the headnode (gallows) was lost last week and all the data is gone. Then this week the large se01 disk server suffered an LVM problem and we can no longer mount grid home areas or access data on the SRM. Unfortunately Phil is on holiday, David is now off sick and I will be away on Monday - hopefully we can cobble something together to get the site running on Tuesday.

Thankfully, dear old Glasgow T2 is running like a charm right now (minor info publishing and WMS problems aside). In fact our SAM status for the last month is 100%, head to head with the T1! Fingers crossed we keep it up.

Labels: CE, SAM, Storage, UKI-SCOTGRID-DURHAM, UKI-SCOTGRID-ECDF

Monday, May 05, 2008

ECDF down for the moment

ECDF have been having real trouble with GPFS in the last week, which gave us some miserable results (23% pass rate on SAM, cf. the UK average of 75%). For the moment the systems team have suspended job submission and the site went into downtime on Friday.

This may or may not be related to the problems we see with the globus job wrapper code on ECDF, where the GPFS daemon consumes up to 300% CPU due to a strange file access pattern in the job home directory. Sam is working on installing an SL4 CE (based on the GT4 code) to see if this improves matters.

Wednesday, April 02, 2008

ECDF running for ATLAS

ECDF have now passed ATLAS production validation. The last link in the chain was ensuring that their SRMv1 endpoint was enabled on the UK's DQ2 server at CERN - this allows the ATLAS data management infrastructure to move input data to ECDF. After that problem was corrected this morning the input data was moved from RAL, a production pilot picked up the job and ran it, and then the output data was moved back to RAL.

I have asked the ATLAS production people to enable ECDF in the production system and I have turned up the pilot rate to pull in more jobs.

We had a problem with the software area not being group writable (for some reason Alessandro's account mapping changed), but this has now been corrected and an install of 14.0.0 has been started.

It's wonderful to now have the prospect of running significant amounts of grid work on the ECDF resource. Well done to everyone!

Saturday, January 05, 2008

Happy New Year ScotGrid - now with added ECDF...

Well, we didn't get it quite as a Christmas present, but the combined efforts of the scotgrid team have managed to get ECDF green for New Year.

In the week before Christmas Greig and I went through a period of intensive investigation as to why normal jobs would run, but SAM jobs would not. Finding that jobs which fork a lot, like SAM jobs, would fail was the first clue. However, it turned out not to be a fork or process limit, but a limitation on the virtual memory size which was the problem. SGE can set a VSZ limit on jobs, and the ECDF team have set this to 2GB, which is the amount of memory they have per core. Alas for jobs which fork, virtual memory is a huge overestimate of their actual memory usage (my 100 child python fork job registers ~2.4GB of virtual memory, but uses only 60MB of resident memory). That's roughly a 40-fold overestimate of memory usage!

As SAM jobs do fork a lot, they hit this 2GB limit and are killed by the batch system, leading to the failures we were plagued by.
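To see the gap between virtual and resident memory for yourself on a Linux node, the `VmSize` and `VmRSS` fields of `/proc/<pid>/status` are enough. The sketch below is not the fork test job mentioned above; the helper names are made up for illustration, and it only reads the figures for a single process.

```python
def parse_status(status_text):
    """Extract (VmSize, VmRSS) in kB from the text of /proc/<pid>/status."""
    fields = {}
    for line in status_text.splitlines():
        key, _, value = line.partition(":")
        if key in ("VmSize", "VmRSS"):
            fields[key] = int(value.split()[0])  # value looks like "  123456 kB"
    return fields["VmSize"], fields["VmRSS"]


def overestimate_factor(vsz_kb, rss_kb):
    """How many times larger the virtual size is than the resident size."""
    return vsz_kb / rss_kb


def process_memory(pid="self"):
    """Read VmSize and VmRSS for a process (Linux only)."""
    with open(f"/proc/{pid}/status") as handle:
        return parse_status(handle.read())
```

Summing `process_memory(pid)` over a job's children gives the sort of total a VSZ limit is effectively charged against, which is why heavily forking jobs trip it long before they run short of real memory.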

A work around, suggested by the systems team, was to submit ops jobs to the ngs queue, which is a special short running test queue (15 min wall time) which has no VSZ limit on it.

Greig modified the information system to publish the ngs queue and ops jobs started to be submitted to this queue on the last day before the holidays.

Alas, this was not quite enough to get us running. We didn't find out until after new year that we also needed to specify a run time limit of 15 minutes on the jobs and submit them to a non-standard project. The last step required me to hack the job manager in a frightful manner as I really couldn't fathom how the perl (yuk!) job manager was supposed to set the project - in fact even though project methods existed they didn't seem to emit anything into the job script.

Finally, with that hack made this morning, ECDF started to pass SAM tests. A long time a coming, that one.

The final question, however, is what to do about this VSZ limit. The various wrappers and accoutrements which grid jobs bring mean that before a line of user code runs there are about 10 processes running, and 600MB of VSZ has been grabbed. This is proving to be a real problem for local LHCb users, because ganga forks a lot and also gets killed off. Expert opinion is that VSZ limits are just wrong.

We have a meeting with the ECDF team, I hope, in a week, and this will be our hot topic.

Big thanks go to Greig for a lot of hard work on this, as well as Steve Traylen, for getting us on the right track, and Kostas Georgiou, for advice about the perils of VSZ in SGE.

Monday, November 26, 2007

ECDF - nearly there...

Thanks to the efforts of Greig and Sam, ECDF now has storage set up. Not a lot of storage (just 40MB), but it proves the headnode is working and the information system is correctly configured.

This means we are now fully passing SAM tests. Hooray!

Of course, passing SAM tests is only the first step though, and there are 3 outstanding issues which I have discovered using an atlas production certificate:

- I was mapped to a dteam account when I submitted my job (not quite as bad as you think - I am obviously in dteam and this was the default grid-mapfile mapping after LCMAPS had failed).

- There's no 32bit python - this has been passed to Ewan for dealing with (along with the list of other compat 32 RPMs).

- There's no outbound http access. This hobbles a lot of things for both ATLAS and LHCb.

Wednesday, November 14, 2007

First Jobs Run at ECDF

Finally, after several months of anguish, SAM jobs are running at ECDF! Note that for the moment they fail replica management tests (there was little point in putting effort into the DPM while the CE was so broken), but at last we're getting output from SAM jobs coming back correctly.

The root cause of this has been networking arrangements which were preventing the worker nodes from making arbitrary outbound connections. Last week we managed to arrange with the systems team to open all ports >1024, outbound, from the workers. Then it was a matter of battering down each of the router blocks one by one (painfully these seemed to take about 2 days each to disappear).
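A quick way to check from a worker node whether a given outbound connection is still being blocked is simply to try opening a TCP socket. The sketch below is a generic probe, not what was actually used at ECDF; the function names are made up, and the hosts and ports to test are left for the caller to supply.

```python
import socket


def can_connect(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False  # refused, filtered, timed out or unresolvable


def blocked_ports(host, ports, timeout=5.0):
    """Return the subset of ports on host that could not be reached."""
    return [port for port in ports if not can_connect(host, port, timeout)]
```

Note that a firewall which silently drops packets shows up as a timeout rather than a refusal, so a full sweep takes up to `timeout` seconds per blocked port.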

Testing will now continue, but we're very hopeful that things will now come together quickly.

Friday, September 14, 2007

ECDF for Beginners

Basically, this is proving far more painful than anticipated. Although the MON/LFC box has been configured, the CE is proving seriously problematic. The ECDF team thought that SL3 was not a winner for GPFS, so Sam tried using the gLite 3.0 CE on top of SL4. This didn't work (not unexpectedly). Although we know that the lcg-CE has been built for gLite 3.1, it's not yet even been released to pre-production, so clearly there's nothing we can use for a production site. So Ewan reinstalled the CE with SL3, in order to install the old gLite 3.0 version. However, it then proved to be very difficult to get GPFS working on SL3, so this is still a work in progress. How long it will take to resolve is anyone's guess.

GPFS is necessary for the software area and the pool account home directories. At this point I would just buy a 500GB disk from PC world and run with that for a month while we wait for the gLite 3.1 CE, but we can't do that with machines other people are running.

Getting the site certified for the end of the month now looks challenging.

Hmmm....
