It appeared at that point that the limiting factor on the performance of the DPM was the IOwait on the MySQL server, so we've been looking into ways to reduce that.

Turning on slow query logging showed that there were actually a couple of relatively common queries which were selecting on columns that weren't indexed in their tables, so we decided to try adding indexes to see if that improved matters. (While indexes add a small constant to the time taken to make a write, there's already quite a few implicit indexes on the tables, and writes are much less common than reads.)

The most common slow queries were of the form:

- select MAX(lifetime) from dpm_get_filereq where pfn = 'some pfn here'

and lifetime is not indexed in the dpm_db.dpm_get_filereq table (to be fair, there's no obvious reason why it should be, and the db is generally pretty well indexed on the whole).

- create index pfn_lifetime on dpm_get_filereq (pfn(255), lifetime);

deals with that.

Similarly, for the less frequent lookups for put requests we add:

- create index status_idx on dpm_put_filereq(status);

and

- create index stime_idx on dpm_req(stime);

and, finally, to optimise out the spikes we see each time monami tries to query the server, we add an index to the cns_db:

- create index usage_by_group Cns_file_metadata(gid, filesize);

(this also speeds up the responsiveness of Greig's DPM Monitoring webapp).

In order to do this without locking the request tables for ages, Stuart implemented a slightly hair-raising approach involving cloning the "static", older, parts of the tables, indexing the clone, and then stopping dpm briefly, and syncing the clone with the dynamic parts before switching the (indexed) clone for the (unindexed) original and restarting dpm.

This works surprisingly well - something like 95% of the request tables appear to be historical and static rather than referring to current requests.

(It also raises the question of if it would be easier just to delete the first 80% or so of all the request tables, keeping a suitable backup copy, of course.)

So, after all that, what was the result?

Well.

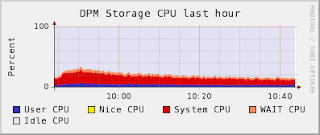

In normal use, the MySQL load is much smoother than before - we've removed pretty much all the load spikes from intensive infrequent queries, and the background load from get requests is roughly halved from previously.

This is visible by comparing the MySQL server loads during HammerCloud test 135 and the most recent test against Glasgow - HC 164:

Unfortunately, within error, it doesn't seem to have actually improved our performance in HammerCloud tests by anything:

which is sad. The iowait still appears (but a bit reduced) when we're under heavy load - the sheer number of reads against the DB is enough to generate this by itself, even with indexes.

It's possible that we could reduce the iowait by increasing the InnoDB Buffer Pool setting for the server - at the moment, we have a 97% hit rate, so increasing that to 99% would cut our iowait by a factor of 3 - but it's not clear that the server is really the bottleneck.

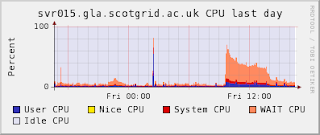

Looking at the other loads:

then it's not clear where the bottleneck is, really - the disks were slightly more stressed (there's a little bit of iowait visible at their peak CPU load), and it looks like something in the network bandwidth topped out at the same time (that peak is suspiciously flat at around 800MB/sec).

Further investigation needed, though!

1 comment:

It's worth pointing out that the index to deal with:

select MAX(lifetime) from dpm_get_filereq where pfn = 'some pfn here'

is actually slightly different from the others. The others turn large scans into small scans over at most a few hundred rows, rather than over the whole table.

The index created:

create index pfn_lifetime on dpm_get_filereq (pfn(255), lifetime);

replaces an existing index on pfn, so it was already a small scan. This is because the query is so common, it's worth further optimistation. The second index turns the small scan into a direct lookup - no scan at all.

The hope was that if the indices are kept in RAM, then we remove disk access totally.

Post a Comment