Upgrade notes:

Basically I'm following https://www.gridpp.ac.uk:443/wiki/UKI-SCOTGRID-GLASGOW_enabling_VO, while bearing in mind that YAIM needs to be rerun whenever services are updated.

Preparations
------------

Added new groups for sgm and prd pool accounts, even though these will not be enabled yet.

Modified the poolacct.py script: it is now much improved and does the new type of accounts (but can still do the old type!). It also now generates users.conf fragments.

Using this, added the relevant entries to passwd, group, shadow and users.conf.
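poolacct.py itself isn't reproduced here, but a minimal Python sketch of the idea might look like the following. It emits passwd lines and users.conf lines for a block of pool accounts. Everything specific is an assumption: the UIDs and GIDs, the snemo/snemosgm naming, the home directories and shell, and the users.conf field layout (UID:LOGIN:GIDs:GROUPs:VO:FLAG:), which is how we understand YAIM's format rather than something copied from the real script.

```python
# Hypothetical sketch of a pool-account generator; not the real poolacct.py.
# UIDs, GIDs, names, home paths and the users.conf layout are assumptions.


def pool_accounts(prefix, vo, base_uid, gids, groups, count, flag=""):
    """Return (passwd_lines, users_conf_lines) for `count` pool accounts.

    gids/groups list the primary group first, then any secondary groups.
    flag is the users.conf special-account flag ("sgm", "prd" or "").
    """
    passwd, users_conf = [], []
    for i in range(1, count + 1):
        login = f"{prefix}{i:03d}"
        uid = base_uid + i - 1
        passwd.append(
            f"{login}:x:{uid}:{gids[0]}:{vo} pool account:/home/{login}:/bin/bash"
        )
        # Assumed users.conf layout: UID:LOGIN:GIDs:GROUPs:VO:FLAG:
        users_conf.append(
            f"{uid}:{login}:{','.join(map(str, gids))}:"
            f"{','.join(groups)}:{vo}:{flag}:"
        )
    return passwd, users_conf


if __name__ == "__main__":
    vo = "supernemo.vo.eu-egee.org"
    pw, uc = pool_accounts("snemo", vo, 50001, [5000], ["snemo"], 2)
    sgm_pw, sgm_uc = pool_accounts("snemosgm", vo, 50101, [5001, 5000],
                                   ["snemosgm", "snemo"], 1, flag="sgm")
    print("\n".join(pw + sgm_pw))
    print("\n".join(uc + sgm_uc))
```

The sgm example puts the account in both its own group and the plain VO group; whether that matches what poolacct.py does for the new account type is an assumption.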

Went through site-info.def again. Added supernemo stanzas and the vo.d/supernemo.vo.eu-egee.org definitions.
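As we understand it, a vo.d file is named after the VO and holds the same variables as the VO_<NAME>_* stanzas in site-info.def, minus the prefix. A quick way to eyeball what YAIM will pick up is to parse it. The sketch below is hypothetical (it is not part of YAIM) and only handles simple KEY=value lines; shlex strips the quoting without expanding $VARIABLES.

```python
import shlex


def parse_vo_d(text):
    """Parse simple KEY=value lines from a YAIM vo.d file into a dict.

    Blank lines and comments are skipped. shlex removes shell quoting but,
    unlike the shell, does not expand $VARIABLES, so those are kept verbatim.
    """
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = " ".join(shlex.split(value))
    return settings
```

For example, parse_vo_d(open("vo.d/supernemo.vo.eu-egee.org").read()) returns a dict such as {"SW_DIR": "$VO_SW_DIR/...", ...}; the keys depend entirely on what the file actually contains.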

All set!

Disk Servers
------------

Modify update.conf to clear cruft out of the system (purge=true)

Clear and restore yum.repos.d.

yum update

Remove local config_mkgridmap function

Run /opt/glite/yaim/bin/yaim -r -s /opt/glite/yaim/etc/site-info.def -f config_mkgridmap

Remove MySQL-server, which had bizarrely been installed on some of the nodes.
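The package and YAIM part of the disk-server sequence can be written down as a dry-run plan, which is handy when repeating it across several servers. This is a hypothetical helper: the YAIM paths and the -r -s ... -f ... invocation are the ones used above, while the plan structure and function names are made up for illustration. It only prints commands; nothing is executed. The update.conf purge and yum.repos.d reset are cfengine/manual steps and are left out.

```python
import shlex

YAIM = "/opt/glite/yaim/bin/yaim"
SITE_INFO = "/opt/glite/yaim/etc/site-info.def"


def yaim_function_cmd(function, site_info=SITE_INFO):
    """argv for rerunning a single YAIM function, as in the notes above."""
    return [YAIM, "-r", "-s", site_info, "-f", function]


def disk_server_plan():
    """Hypothetical dry-run plan for the package/YAIM steps on a disk server."""
    return [
        ["yum", "update"],
        yaim_function_cmd("config_mkgridmap"),
        ["rpm", "-e", "MySQL-server"],  # only on nodes where it crept in
    ]


if __name__ == "__main__":
    for argv in disk_server_plan():
        print(shlex.join(argv))  # print only; nothing is run
```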

Noticed that config_lcgenv is broken for DNS-style VO names. Submitted ticket 24917, with a patch. However, for us this is controlled by cfengine, so I defined the appropriate variables there, e.g., VO_SUPERNEMO_VO_EU_EGEE_ORG_DEFAULT_SE.
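For reference, a DNS-style VO name maps onto YAIM variable names by upper-casing it and turning dots and dashes into underscores, which is how supernemo.vo.eu-egee.org becomes VO_SUPERNEMO_VO_EU_EGEE_ORG. A minimal sketch of generating the DEFAULT_SE exports we now push out via cfengine might look like this; the function names, the SE hostname and the idea of rendering a shell profile fragment are assumptions, not the actual cfengine configuration.

```python
import re


def vo_var_prefix(vo):
    """VO name -> YAIM variable prefix: upper-case, '.' and '-' become '_'."""
    return "VO_" + re.sub(r"[.-]", "_", vo.upper())


def render_vo_env(default_ses):
    """export lines for each VO's DEFAULT_SE, sorted by VO name.

    default_ses maps VO name -> SE hostname (hypothetical values below).
    """
    return "\n".join(
        f'export {vo_var_prefix(vo)}_DEFAULT_SE="{se}"'
        for vo, se in sorted(default_ses.items())
    )


if __name__ == "__main__":
    print(render_vo_env({"supernemo.vo.eu-egee.org": "se.example.org",
                         "atlas": "se.example.org"}))
```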

Worker Nodes
------------

The changed passwd/group files have already triggered home directory creation via cfengine, so there is no need to run YAIM.

Ran yum update on the WNs (using pdsh). Removed the glite-SE_dpm_disk, DPM-gridftp-server and DPM-rfio-server RPMs, a relic of !disk037 (whoops). The WNs will be blown away and rebuilt at the transition to SL4 anyway.
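To spot that kind of relic before it bites, the installed package list can be checked for packages that have no business on a WN. A minimal sketch, assuming the input is `rpm -qa` output in the default name-version-release form; the relic names are the three removed above, and the function names are hypothetical.

```python
RELICS = {"glite-SE_dpm_disk", "DPM-gridftp-server", "DPM-rfio-server"}


def rpm_name(nvr):
    """Package name from an `rpm -qa` entry of the form name-version-release."""
    return nvr.rsplit("-", 2)[0]


def find_relics(installed, relics=RELICS):
    """Installed packages whose name is in the relic set, sorted."""
    return sorted(pkg for pkg in installed if rpm_name(pkg) in relics)
```

Feeding it the `rpm -qa` output gathered from each node (e.g. via pdsh) gives a per-node list of packages to remove. Using `rpm -qa --qf '%{NAME}\n'` instead avoids the version-stripping step altogether.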

MON/Top Level BDII
------------------

Checked svr019 (MON + top-level BDII). Nothing to update here. (The top-level BDII was done last week: http://scotgrid.blogspot.com/2007/07/top-level-bdii-updated-to-glue-13yaim.html)

Site BDII/svr021
----------------

Did a yum update, then reran YAIM. Got the error

SITE_SUPPORT_EMAIL not set

and

chown: failed to get attributes of `/opt/lcg/var/gip/ldif': No such file or directory
chmod: failed to get attributes of `/opt/lcg/var/gip/ldif': No such file or directory

???
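The SITE_SUPPORT_EMAIL error suggests a cheap pre-flight check before rerunning YAIM: confirm that the variables it needs are actually set in site-info.def. A hypothetical sketch; only SITE_SUPPORT_EMAIL comes from the error above, any other names you pass in are your own choice, and the parser only understands simple NAME=value lines (commented-out or empty values count as missing).

```python
import re

# Only simple NAME=value lines are recognised; comment lines never match.
ASSIGNMENT = re.compile(r"^([A-Z_][A-Z0-9_]*)=(.*)$")


def defined_vars(text):
    """Map variable name -> value (quotes stripped) for a site-info.def-style file."""
    found = {}
    for line in text.splitlines():
        m = ASSIGNMENT.match(line.strip())
        if m:
            found[m.group(1)] = m.group(2).strip().strip("\"'")
    return found


def missing_vars(text, required):
    """Required variables that are absent, commented out, or empty."""
    found = defined_vars(text)
    return [name for name in required if not found.get(name)]
```

For example, missing_vars(open("/opt/glite/yaim/etc/site-info.def").read(), ["SITE_SUPPORT_EMAIL"]) returns an empty list once the variable is properly set.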

An odd entry has appeared:

GIP file:///opt/lcg/libexec/lcg-info-wrapper

which is not active on a standalone site BDII (would it work on a CE?).

Note that the DPM has not yet been upgraded, so the site BDII is still polling the GRIS on svr018 until this is done.

UI / svr020
-----------

yum update

Reran YAIM and discovered that I need to define the supernemo queue as snemo in $QUEUES (which is still used). Getting this wrong caused the gLite python to throw an exception (my mistake, but crap code nonetheless...).

Corrected site-info.def and reran YAIM.
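The exception came from a queue/VO mismatch in site-info.def, which could be caught beforehand. The sketch below assumes YAIM's convention that each queue listed in QUEUES has a matching <QUEUE>_GROUP_ENABLE variable (e.g. SNEMO_GROUP_ENABLE for the snemo queue) and that its value is a list of plain VO names; the functions are hypothetical and take variables already parsed into a dict.

```python
def queue_var(queue):
    """Assumed YAIM-style variable name for a queue, e.g. snemo -> SNEMO_GROUP_ENABLE."""
    return queue.upper().replace(".", "_").replace("-", "_") + "_GROUP_ENABLE"


def queue_problems(site_vars):
    """Cross-check QUEUES, <QUEUE>_GROUP_ENABLE and VOS in a parsed site-info.def."""
    problems = []
    enabled = set()
    for queue in site_vars.get("QUEUES", "").split():
        var = queue_var(queue)
        groups = site_vars.get(var, "").split()
        if not groups:
            problems.append(f"queue {queue}: {var} not set")
        enabled.update(groups)
    for vo in site_vars.get("VOS", "").split():
        if vo not in enabled:
            problems.append(f"VO {vo}: not enabled on any queue in QUEUES")
    return problems


if __name__ == "__main__":
    print(queue_problems({
        "VOS": "atlas cdf supernemo.vo.eu-egee.org",
        "QUEUES": "atlas cdf",
        "ATLAS_GROUP_ENABLE": "atlas",
        "CDF_GROUP_ENABLE": "cdf",
    }))
```

Run against the broken configuration, this would report the unqueued supernemo VO instead of letting YAIM fall over.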

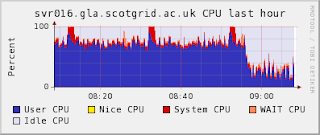

CE / svr016
-----------

Checked the list of RPMs to update. The potentially dangerous one is vdt_globus_jobmanager_pbs (we have a patched pbs job manager). It seems the updated pbs jobmanager has been patched to support DGAS accounting. I have commented out the cfengine job manager replacement and will diff and repatch as necessary after configuration.

Created the cdf and snemo queues using the torque_queue_cfg script (note that this now adds access for the sgm and prd groups, even if they are not used).
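For anyone without our torque_queue_cfg script, the equivalent qmgr commands for a group-restricted queue look roughly like this. It is a sketch, not the script itself: queue_type, acl_group_enable, acl_groups, enabled and started are standard Torque queue attributes, but the snemosgm/snemoprd group names are hypothetical.

```python
def torque_queue_commands(queue, groups):
    """qmgr commands for an execution queue restricted to the given Unix groups."""
    cmds = [
        f"create queue {queue}",
        f"set queue {queue} queue_type = Execution",
        f"set queue {queue} acl_group_enable = True",
        f"set queue {queue} acl_groups = {groups[0]}",
    ]
    # Further groups (e.g. sgm/prd) are appended to the ACL list with +=.
    cmds += [f"set queue {queue} acl_groups += {g}" for g in groups[1:]]
    cmds += [f"set queue {queue} enabled = True",
             f"set queue {queue} started = True"]
    return cmds


if __name__ == "__main__":
    for c in torque_queue_commands("snemo", ["snemo", "snemosgm", "snemoprd"]):
        print(f'qmgr -c "{c}"')
```

Each printed line can be run on the Torque server; adding the sgm and prd groups up front means nothing needs touching when those accounts are switched on.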

Ran YAIM.

Immediate sanity check:

GRIS is ok - information system is up.

Gatekeeper dead. Help! Restarted.

globus.conf was rewritten, blowing away the pbs job manager. Re-enabled the pbs job manager and restarted. OK, so I moved it under the control of cfengine, but again the caveats about running YAIM apply.

Gatekeeper restarted again.

Now tailing the gatekeeper logs; everything looks OK.

Whew!

Diffing the pbs and lcgpbs job managers, I found that YAIM has added DGAS support to them. I used these as new template modules and repatched the "completed" job state (https://savannah.cern.ch/bugs/?7874). Helper.pm was unchanged, so it still has the correct patch for staging via globus.
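The diff step is easy to script so it can be repeated after the next update. A minimal sketch using Python's difflib; the file names (e.g. pbs.pm and a patched copy) are placeholders, as the real job manager paths aren't given here.

```python
import difflib
from pathlib import Path


def diff_text(old, new, old_name="old", new_name="new"):
    """Unified diff of two strings, e.g. YAIM's job manager vs. our patched copy."""
    return "".join(difflib.unified_diff(
        old.splitlines(keepends=True),
        new.splitlines(keepends=True),
        fromfile=old_name, tofile=new_name))


def diff_files(generated, patched):
    """Same, reading both files from disk (paths are site-specific placeholders)."""
    return diff_text(Path(generated).read_text(), Path(patched).read_text(),
                     str(generated), str(patched))
```

An empty result from diff_files("pbs.pm", "pbs.pm.patched") means the update didn't touch anything we care about; otherwise the hunks show what needs repatching.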

svr023 / RB
-----------

Ran yum update. The actual RB has not changed, so I did not run YAIM.

svr018 / DPM
------------

Upgraded YAIM to check on config_DPM_upgrade. Looks quite simple.

Booked downtime for 2pm to do this.

At 2pm: stopped DPM

yum update

Ran the config_DPM_upgrade YAIM function (which updated the db). Took ~8 minutes.

Started DPM again

Ran the config_gip YAIM function (to publish access details for cdf/supernemo in the info system)

Ran the config_mkgridmap YAIM function (to add the additional certificates to the gridmap files)

Ran the config_BDII YAIM function (to redo the information system servers)

Checked BDII was ok. It is.

Then went back to the site BDII and changed the URL to

BDII_DPM_URL="ldap://$DPM_HOST:2170/mds-vo-name=resource,o=grid"

then restarted the site BDII. Checked the LDAP info was OK.
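Editing that variable by hand is fine once, but a small helper makes it repeatable. A hypothetical sketch that replaces (or appends) a NAME="value" line in site-info.def-style text; it leaves $DPM_HOST for the shell to expand, just as in the line above.

```python
import re


def set_var(text, name, value):
    """Replace the first NAME=... line with NAME="value", or append it if absent."""
    line = f'{name}="{value}"'
    pattern = re.compile(rf"^{re.escape(name)}=.*$", re.MULTILINE)
    if pattern.search(text):
        # A function replacement stops re.sub interpreting backslashes in value.
        return pattern.sub(lambda _match: line, text, count=1)
    return text.rstrip("\n") + "\n" + line + "\n"
```

For example, set_var(text, "BDII_DPM_URL", "ldap://$DPM_HOST:2170/mds-vo-name=resource,o=grid") rewrites the existing line in place; commented-out copies (starting with #) are left alone because the pattern is anchored at the start of the line.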

Came up from downtime (took 15 minutes). Damn, we got SAM tested in the interval!

After Lunch
-----------

Wary of the APEL changes, I read Yves' notes at http://www.gridpp.ac.uk/wiki/GLite_Update_27, but couldn't see the same problems here. Ran the APEL publisher on the CE and MON; things seem to be OK, so I let things lie.

Finally able to lock the atlas pool down to atlas members! When I looked in Cns_groupinfo, though, there had been rather an explosion of atlas groups:

mysql> select * from Cns_groupinfo where groupname like 'atlas%';
+-------+------+-----------------------+
| rowid | gid  | groupname             |
+-------+------+-----------------------+
|     2 |  103 | atlas                 |
|    16 |  117 | atlas/Role=lcgadmin   |
|    17 |  118 | atlas/Role=production |
|    55 |  156 | atlas/lcg1            |
|    57 |  158 | atlas/usatlas         |
+-------+------+-----------------------+

Hmmm, I have given the pool to all these gids. Is this really necessary?
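One thing worth noting: LIKE 'atlas%' would also match any other VO whose name merely starts with "atlas". Matching the VO group itself plus its "atlas/..." subgroups and roles is tighter. A minimal sketch using an in-memory SQLite table loaded with the gid/groupname rows above; the real table lives in the DPM MySQL database, and the extra atlasfoo row is hypothetical, there only to show the difference. The same WHERE clause should work in MySQL.

```python
import sqlite3

# gid/groupname pairs from the Cns_groupinfo query above
ATLAS_ROWS = [(103, "atlas"), (117, "atlas/Role=lcgadmin"),
              (118, "atlas/Role=production"), (156, "atlas/lcg1"),
              (158, "atlas/usatlas")]


def make_demo_db(rows):
    """In-memory stand-in for the DPM Cns_groupinfo table (gid, groupname only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Cns_groupinfo (gid INTEGER, groupname TEXT)")
    conn.executemany("INSERT INTO Cns_groupinfo VALUES (?, ?)", rows)
    return conn


def vo_gids(conn, vo):
    """gids of the VO group plus its subgroups/roles ('vo/...').
    Note: '_' or '%' in a VO name would act as LIKE wildcards here."""
    cur = conn.execute(
        "SELECT gid FROM Cns_groupinfo "
        "WHERE groupname = ? OR groupname LIKE ? ORDER BY gid",
        (vo, vo + "/%"))
    return [gid for (gid,) in cur]


if __name__ == "__main__":
    db = make_demo_db(ATLAS_ROWS + [(200, "atlasfoo")])  # atlasfoo: hypothetical
    print(vo_gids(db, "atlas"))  # -> [103, 117, 118, 156, 158]
```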

Done! Whew!