Alerted by Dave Colling, we found a biomed user who was running about 100 jobs on the cluster, all trying to factorise a 768-bit number (and win $50 000 in the process).

Clearly this is abuse of our resources and has nothing to do with biomed. They have consumed more than 80 000 normalised CPU hours since September. I'm sure the operational cost of this runs to several thousand pounds at least (should we bill them?).

It was all the more irritating as we had a stack of ATLAS and local users' jobs waiting to run, but the biomed jobs were set up to download subjobs from the user's own job queue (they were effectively limited pilots), so they ran right up to the 36-hour wallclock limit.

I banned the user, deleted their jobs and sent a very angry GGUS ticket.

As a slight aside, note how efficient pilot job systems are at hoovering up spare job slots and consuming resources on the cluster well in excess of the nominal 1% fair share we give to biomed.

Tuesday, October 30, 2007

NGS VO Supported

I should now have fixed the NGS VO on ScotGrid. This was supposed to happen during the SL4 transition, but got lost in the rush to get the site up and running correctly.

LCMAPS now knows to map certificates from the ngs.ac.uk VO to .ngs pool accounts, and the fallback grid-mapfile is being correctly made.
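As I understand the classic gridmapdir scheme behind pool accounts, each account (ngs001, ngs002, ...) is an empty file in a directory, and a lease is a hard link named after the encoded certificate DN that shares that file's inode. The Python below is a simplified sketch of that idea, not LCMAPS itself: the DN encoding is plain lowercase URL-encoding, there is no locking, and the account names and DNs are hypothetical. It also hints at why syncing mappings between the UI and the CE is awkward: the lease state lives entirely in that directory.

```python
import os
import tempfile
from urllib.parse import quote


def dn_filename(dn):
    """Flatten a certificate DN into a filename (simplified: URL-encode, lowercase)."""
    return quote(dn, safe="").lower()


def lease_pool_account(gridmapdir, dn, prefix):
    """Return the pool account leased to `dn`, creating a lease if needed.

    Each pool account is an empty file (e.g. ngs001). A lease is a hard link,
    named after the encoded DN, that shares the account file's inode, so a
    free account is one whose file has a link count of 1.
    No locking is done here; concurrent callers could race.
    """
    dn_path = os.path.join(gridmapdir, dn_filename(dn))
    accounts = sorted(n for n in os.listdir(gridmapdir) if n.startswith(prefix))
    if os.path.exists(dn_path):
        # Existing lease: find the account file with the same inode.
        inode = os.stat(dn_path).st_ino
        for name in accounts:
            if os.stat(os.path.join(gridmapdir, name)).st_ino == inode:
                return name
        raise RuntimeError(f"lease for {dn} points at no pool account")
    for name in accounts:
        account_path = os.path.join(gridmapdir, name)
        if os.stat(account_path).st_nlink == 1:
            os.link(account_path, dn_path)  # take the free account
            return name
    raise RuntimeError(f"no free {prefix} pool accounts left")


if __name__ == "__main__":
    # Demonstration in a throwaway directory with three hypothetical accounts.
    with tempfile.TemporaryDirectory() as gmd:
        for i in range(1, 4):
            open(os.path.join(gmd, f"ngs{i:03d}"), "w").close()
        for dn in ("/C=UK/CN=alice", "/C=UK/CN=bob", "/C=UK/CN=alice"):
            print(dn, "->", lease_pool_account(gmd, dn, "ngs"))
```

Run as a script, alice gets ngs001, bob gets ngs002, and alice's second request finds her existing lease and returns ngs001 again.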

What remains to be sorted out is whether we can support NGS without giving shell access. I am loath to turn on pool-mapped gsissh, as we'd have to hack the grid-mapfile on the UI (to exclude other VOs) and somehow keep the pool account mappings in sync between the UI and the CE.

At the moment I'm sure it will be broken, because we don't even have shared home areas, but even if we introduce these I'm not certain it will be practical to offer only gsiftp.

Some Queue Work

I introduced a new long queue last night, primarily to support long validation jobs run by some of our Durham phenomenology friends. It has a 7-day CPU/wall limit. As I was experimenting with queues which support more than one VO, I added support for ATLAS on the queue, thinking it might be of use to our local ATLAS users.

Unfortunately, as it was advertised in the information system, and was a perfectly valid and matchable queue, we soon got production and user ATLAS jobs coming in on this long queue. So, tonight, I have stopped advertising it for ATLAS, to push things back to the normal 36 hour limited grid queue.

I also discovered that maui does its "group" fairsharing on the submitting user's primary group. This is pretty obvious, of course, but as we've traditionally had one queue per VO, with everyone in that VO in a primary group of the same name, I had somehow got it muddled in my mind that it was a queue balancing act instead. This turns out to be good, because we can set different fair shares for users sharing the gridpp queue, as long as we keep them in per-project primary groups.
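To make the point concrete, here is a toy Python model (not maui's actual algorithm or configuration syntax) in which usage is charged to the submitting user's primary group rather than to the queue. The users, groups and percentage targets are all hypothetical.

```python
from collections import defaultdict

# Hypothetical users: all submit to the shared "gridpp" queue,
# but each has a per-project primary group.
PRIMARY_GROUP = {"alice": "pheno", "bob": "pheno", "carol": "astro"}
# Hypothetical fairshare targets, as a percentage of the cluster.
TARGETS = {"pheno": 20.0, "astro": 5.0}


def group_usage(jobs):
    """Sum CPU hours per primary group; which queue a job used is ignored."""
    usage = defaultdict(float)
    for user, _queue, cpu_hours in jobs:
        usage[PRIMARY_GROUP[user]] += cpu_hours
    return dict(usage)


def over_target(jobs, total_cluster_hours):
    """Groups whose share of delivered CPU exceeds their fairshare target."""
    return sorted(
        group
        for group, hours in group_usage(jobs).items()
        if 100.0 * hours / total_cluster_hours > TARGETS[group]
    )


jobs = [
    ("alice", "gridpp", 100.0),
    ("bob", "gridpp", 50.0),
    ("carol", "gridpp", 80.0),
]
print(group_usage(jobs))          # {'pheno': 150.0, 'astro': 80.0}
print(over_target(jobs, 1000.0))  # ['astro']
```

Although all three users share one queue, astro (8% of the cluster against a 5% target) would be penalised while pheno (15% against 20%) would not.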

Thursday, October 25, 2007

... and we're back

Glasgow Down - Power Outage

We lost power in the Kelvin Building this morning just before 9am. News is:

Power outage

25/10/07 08:49 BST

We have been informed by Estates and Buildings that there is a power outage in the Kelvin Building among others. The Kelvin buildings router provides network services to other buildings and so there will be no network service in the following buildings: Kelvin, Davidson, West-medical, Pontecorvo and Anderson colleges, Joseph-Black, Bower, Estates, Garage, Zoology and shares the medical school with Boyd-Orr.

Scottish power are working on the problem. Further information as it becomes available.

We've sent an EGEE broadcast and put in an unscheduled downtime.

Really annoying - we were running along very nicely when this happened (although we'd had to shut down 20 WNs because of the loss of an a/c unit on Tuesday).

Labels: downtime, power outage, UKI-SCOTGRID-GLASGOW

Glasgow site missing, presumed AWOL

This morning uki-scotgrid-glasgow and other systems in the same building are offline. We're not sure of the cause yet and will investigate further once someone's on site.

UPDATE - 09:08 - Confirmed as power cut affecting building (see http://www.gla.ac.uk/services/it/helpdesk/#d.en.9346) No ETA yet for restoring services. EGEE Broadcast sent.

Wednesday, October 24, 2007

News from ATLAS Computing

There is some significant news for sites from ATLAS software week. The decision was taken yesterday to move all ATLAS MC production to a pilot job system, with the pilots based on the Panda system developed by US ATLAS. The new system will get a new name; pallete and pallas are the front runners. (I like pallas myself.)

In addition EGEE components, such as LFC, will be standardised on for DDM.

As this is a pilot job system, the anticipated model is that ATLAS production will keep a steady stream of pilots on ATLAS T2 sites, which pull in real job payloads from the central queue.

This is very like the LHCb MC production model, so as the transition is made to this system sites should start to see much better usage of their resources by ATLAS - just like LHCb are able to scavenge resources from all over the grid.

Canada have recently shifted to Panda production, and greatly increased their ATLAS workrate as a result. There have been some trials of the system in the UK and France, which were also very encouraging.

Of course, there is a very large difference from LHCb, because ATLAS don't just do simulation at T2s, but also digitisation and reconstruction. These steps require input files, and the Panda-based system deals with this by ensuring that the relevant input dataset is staged onto your local SE by the ATLAS distributed data management system (DDM); the output dataset will also be created on your local SE, and DDM will ship it up to the Tier-1 after the jobs have run.

This means that sites will really need a working storage system for ATLAS work from now on (in the previous EGEE production model any of the SEs in your cloud could be used, which masked a lot of site problems but caused us huge data management headaches).

In the end using pilots had two compelling advantages. Firstly, the sanity of the environment can be checked before a job is actually run, which means that panda gets 90% job efficiency (the other EGEE executors struggled to reach 70%). Secondly, and this is the clincher, it means that we can prioritise tasks within ATLAS, which is impossible to do otherwise.
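Both advantages show up in the shape of a pilot's main loop, sketched here in Python: check the worker node first, then repeatedly pull the highest-priority payload from a VO-side queue, stopping when the remaining wallclock cannot fit another payload. This is a toy model under those assumptions, not Panda's actual protocol; the queue class, the priority numbers, the time estimates and the free-space check are all hypothetical.

```python
import heapq
import shutil


def environment_ok(scratch_dir="/tmp", min_free_bytes=1 << 30):
    """Example sanity check: enough free scratch space before taking work."""
    return shutil.disk_usage(scratch_dir).free >= min_free_bytes


class TaskQueue:
    """Hypothetical central task queue; lower number = higher priority."""

    def __init__(self):
        self._heap = []
        self._counter = 0  # tie-breaker keeps FIFO order within a priority

    def submit(self, priority, name, est_hours):
        heapq.heappush(self._heap, (priority, self._counter, name, est_hours))
        self._counter += 1

    def pull(self, max_hours):
        """Pop the best-priority task that fits in max_hours, or None."""
        skipped, found = [], None
        while self._heap:
            item = heapq.heappop(self._heap)
            if item[3] <= max_hours:
                found = item
                break
            skipped.append(item)
        for item in skipped:  # put back tasks that did not fit
            heapq.heappush(self._heap, item)
        return None if found is None else (found[2], found[3])


def run_pilot(queue, wallclock_hours, env_check=environment_ok):
    """Run payloads until the queue is empty or the time left is too short."""
    if not env_check():
        return []  # broken node: exit without consuming any payload
    used, ran = 0.0, []
    while True:
        task = queue.pull(wallclock_hours - used)
        if task is None:
            return ran
        name, est_hours = task
        ran.append(name)  # a real pilot would execute the payload here
        used += est_hours
```

For example, with `mc-production` (priority 1, 20 h), `reco` (priority 1, 12 h) and `user-analysis` (priority 2, 10 h) queued, a pilot in a 36-hour slot runs the two priority-1 tasks and leaves the analysis job for another pilot, since only 4 hours remain. The failed-sanity-check path returns immediately, which is where the efficiency gain comes from: a bad node never swallows a real job.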

At the moment the push will be to get ATLAS production moved to this new system, probably on a cloud-by-cloud basis. This should not cause sites headaches, as production is a centralised activity (most sites still have a single atlasprd account anyway). However, the pilots can also run user analysis jobs, and this will require glexec functionality. Alessandra and I stressed to Kors that this must be supported in glexec's non-suid mode.

In the UK ATLAS community we now need to get our DQ2 VOBox working properly; at the moment dataset subscriptions in the UK are just much too slow.

Postscript: I met Joel at lunchtime, and he wanted me to namecheck LHCb's DIRAC system, as Panda is based on DIRAC. Well, I didn't know that, but I suppose I'll learn a lot more about the internals of these things in the next few months.

Monday, October 22, 2007

EGEE: It's broken but it works...

I had meant to blog this a week ago: I did my first shift for ATLAS EGEE production. This was really being thrown in at the deep end, as the twiki instructions were, well, spartan, to say the least. (And definitely misleading in places, actually.)

I was on Data Management, which meant concentrating on problems of data stage-in and stage-out, as well as trying to pick up sites which had broken tool sets.

It felt like a bit of a wild ride: there are almost always problems of some kind, and part of the art is clearly sorting the completely urgent, must-be-dealt-with-now problems from the simply urgent ones, down to the deal-with-this-in-a-quiet-moment ones.

I found problems at T1s (stage-in, overloaded dCaches, flaky LFCs) and T2s (SEs down, quite a few broken lcg-utils, some sites just generically not working but giving very strange errors). I raised a large number of GGUS tickets, but sometimes it's very difficult to know what the underlying problem is, and batting a ticket back and forth with the site is very time consuming.

It's a very different experience from being on the site side. Instead of a "deep" view of a few sites you have a "shallow" view of almost all of them. If you want to read my round-up of issues through the week, it's on indico (it's the DDM shifter report).

Disk Servers and Power Outages

Your starter for 10TB: how long does it take to do an fsck on 10TB of disk? Answer: about 2 hours.

Which in theory was fine - in at 10am, out of downtime by 12. However, things didn't go quite according to plan.

The first problem was that disk038-41, which had been set up most recently, had the weird disk label problem, where the labels had been created with a "1" appended to them. Of course, this wouldn't have mattered, except that we'd told cfengine to control fstab based on the older servers (which had no such suffix), so the new systems could not find their partitions and sat awaiting root intervention. That put those servers back by about an hour.

Secondly, some of the servers were under the impression that they had not checked their disks for ~37 years (mke2fs at the start of the epoch?), so had to be coaxed into doing so by hand, which was another minor hold up.

Third, I had decided to convert all the file systems to ext3, to protect them in the case of power outages. It turns out that making a journal for a 2TB filesystem takes about 5 minutes, so 25 minutes for a set of 5.

And, last but not least, the machines needed new kernels, so had to go through a final reboot cycle before they were ready.

The upshot was that we were 15 minutes late with the batch system (disk037 was already running ext3, fortunately), but an hour and 15 minutes late with the SRM. I almost did an EGEE broadcast, but in the end, who's listening Sunday lunchtime? It would just have been more mailbox noise for Monday, and irrelevant by that time anyway.

As the SRMs fill up, of course, disk checks will take longer, so next time I would probably allow 4 hours if an fsck is likely.

A few other details:

* The fstab for the disk servers now names partitions explicitly, so no dependence on disk labels.

* The BDII going down hit Durham and Edinburgh with RM failures. Ouch! We should have seen that one coming.

* All the large (now ext3) partitions have been set to check every 10 mounts or 180 days. The older servers actually had ~360 days of uptime, so if this is an annual event then doing an fsck once a year should be ok.
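The changes above (explicit device names in fstab, ext3 journals, and a 10-mount/180-day check schedule) can be sketched as a small Python helper that only *generates* the fstab lines and the matching tune2fs commands, so they can be reviewed before anything touches a disk. The device names and mount points are hypothetical. `tune2fs -j` adds a journal, `-c` sets the maximum mount count and `-i` the check interval; the 5-minute figure is the per-2TB journaling time we observed, not a general constant.

```python
import shlex

# Hypothetical partitions on one disk server: device -> mount point.
PARTITIONS = {
    "/dev/sdb1": "/disk/1",
    "/dev/sdc1": "/disk/2",
}


def fstab_lines(partitions, fstype="ext3"):
    """fstab entries that name devices explicitly (no LABEL= lookups)."""
    return [
        f"{dev}\t{mnt}\t{fstype}\tdefaults\t0 2"
        for dev, mnt in sorted(partitions.items())
    ]


def tune2fs_commands(partitions, max_mounts=10, interval="180d"):
    """tune2fs invocations: add a journal, then set the fsck schedule.

    Skip the -j step for filesystems that are already ext3.
    """
    cmds = []
    for dev in sorted(partitions):
        cmds.append(["tune2fs", "-j", dev])
        cmds.append(["tune2fs", "-c", str(max_mounts), "-i", interval, dev])
    return cmds


def journal_minutes(n_filesystems, minutes_each=5):
    """Serial journal-creation time at ~5 minutes per 2TB filesystem."""
    return n_filesystems * minutes_each


if __name__ == "__main__":
    print("\n".join(fstab_lines(PARTITIONS)))
    for cmd in tune2fs_commands(PARTITIONS):
        # Review first; then e.g. subprocess.run(cmd, check=True)
        print(shlex.join(cmd))
    print(journal_minutes(5), "minutes of journaling for 5 filesystems")
```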

Sunday, October 21, 2007

Glasgow power outage

Ho hum, it's that time of year for HV switchgear checking. A rolling programme of work across campus meant that the building housing the Glasgow cluster was due for an outage at ungodly-o-clock in the morning. We arranged the outage in advance and booked scheduled downtime. All OK. Then, after G had taken some well-deserved hols, I discovered how dreadful the CIC portal is for sending an EGEE broadcast. I want to tell users of the site it's going down. Any chance of this in English? "RC management"? What is 'RC'? It doesn't explain it. Then who should I notify? Again, no simple descriptions... Grr. Rant...

OK, the system went down cleanly enough (pdsh -a poweroff or similar). Bringing it back up? Hmm. First off, the LV switchboard needed resetting manually, so the UPS had a flat battery. Then one of the PDUs decided to restore all its sockets to 'On' without waiting to be told (so all the servers leapt into life before the disks were ready). Then the disk servers decided they needed to fsck (it'd been a year since the last one): slooooow. Oh, and the disk labels on the system disks were screwed up (/1 and /tmp1 rather than / and /tmp, for example), so another manual workaround was needed.

Finally we were ready to bring the worker nodes back, just on the 12:00 deadline. I left Graeme still hard at it, but there are a few things we'll need to pull out in a post mortem. I'm sure Graeme will blog some more.
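One likely post-mortem item is start-up ordering: the PDU powered everything at once, so servers came up before their disks. A staged power-on order can be derived from dependencies with a topological sort; here is a Python sketch using the standard library's `graphlib` (Python 3.9+). The component tiers and dependencies are hypothetical, not our actual configuration.

```python
from graphlib import TopologicalSorter

# Hypothetical dependencies: each key must wait for the items in its set.
DEPENDS_ON = {
    "disk-arrays": set(),
    "disk-servers": {"disk-arrays"},
    "core-services": {"disk-servers"},  # CE, SE, BDII, ...
    "worker-nodes": {"core-services", "disk-servers"},
}


def power_on_stages(deps):
    """Group components into stages; everything in a stage can start together."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        # In real life: power these on, wait for health checks, then mark done.
        sorter.done(*ready)
    return stages


for n, stage in enumerate(power_on_stages(DEPENDS_ON), start=1):
    print(n, stage)
```

With these dependencies each tier gets its own stage; independent components with no mutual dependencies would share a stage and could be powered on together.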

Wednesday, October 10, 2007

svr016 (CE) sick

Our CE appears to have fallen over this morning at 7AM. It's not responding to SSH (well, with a load like that I'm not surprised). It'll need poking as soon as someone's on site.

UPDATE - 10:00. Unresponsive to even the 'special' keyboard we keep for such events. Needed the big red button pressing. Seems to have come back OK. Monitoring for fallout.

Monday, October 08, 2007

RB Corrupts its Database

Our RB (svr023) seriously died today.

I was alerted to a problem by a pheno user who was having trouble submitting jobs. When I checked the RB I found that the root partition was full, choked by massive /var/log/wtmp files and by an extremely large RB database in /var/lib/mysql.

When I had reinstalled the RB 2 weeks ago I had ensured that /var/edgwl was on a large disk area, but having more than 4GB filled up in less than 2 weeks by the other denizens of /var was completely unexpected.

The emergency procedure was then to move /var/log and /var/lib/mysql over to the /disk partition, creating soft links at the old locations pointing to the new ones.
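The emergency move can be sketched in Python: report how full a filesystem is, then move a directory onto a roomier partition and leave a symbolic link at the old path. This is a sketch assuming the service using the directory (here mysqld) has been stopped first; the paths are examples only, and the demo runs on a throwaway tree rather than a live /var.

```python
import os
import shutil
import tempfile


def percent_used(path):
    """Percentage of the filesystem containing `path` that is in use."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.used / usage.total


def relocate_with_symlink(old_path, new_parent):
    """Move old_path into new_parent and leave a symlink at the old location.

    Stop whatever uses old_path (e.g. mysqld) first. When the move crosses
    filesystems, shutil.move copies then deletes, and file ownership is not
    preserved, so fix it afterwards (e.g. chown -R mysql:mysql).
    """
    old_path = old_path.rstrip("/")
    if os.path.islink(old_path):
        raise ValueError(f"{old_path} is already a symlink")
    new_path = os.path.join(new_parent, os.path.basename(old_path))
    if os.path.exists(new_path):
        raise FileExistsError(new_path)
    shutil.move(old_path, new_path)
    os.symlink(new_path, old_path)
    return new_path


def demo():
    """Exercise the move on a throwaway tree rather than a live /var."""
    with tempfile.TemporaryDirectory() as root:
        old = os.path.join(root, "var", "mysql")
        os.makedirs(old)
        with open(os.path.join(old, "short_fields.MYI"), "w") as f:
            f.write("data")
        os.makedirs(os.path.join(root, "disk"))
        new = relocate_with_symlink(old, os.path.join(root, "disk"))
        with open(os.path.join(old, "short_fields.MYI")) as f:
            readable = f.read() == "data"
        return os.path.islink(old) and readable and new.endswith("mysql")


# On the real RB this would be something like:
#   if percent_used("/") > 90: relocate_with_symlink("/var/lib/mysql", "/disk")
print(f"/ is {percent_used('/'):.1f}% full; demo ok: {demo()}")
```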

This seemed to be going ok, but job submission was still failing. When I checked the error log for mysql I got the message:

071008 16:25:32 [ERROR] /usr/sbin/mysqld: Can't open file: 'short_fields.MYI' (errno: 145)

The short_fields table definitely existed. I even checked it against the last version in /var. Logging in to mysql demonstrated that this table had become corrupted.
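For MyISAM tables, errno 145 means the table was marked as crashed and should be repaired (MySQL's `perror 145` reports this). To list every affected table in an error log, a regular-expression scan like the Python sketch below would do; the line format is assumed from the single line quoted above, and the log path in the comment is hypothetical.

```python
import re

# Matches lines such as:
# 071008 16:25:32 [ERROR] /usr/sbin/mysqld: Can't open file: 'short_fields.MYI' (errno: 145)
PATTERN = re.compile(
    r"^(?P<stamp>\d{6} +\d{1,2}:\d{2}:\d{2}) \[ERROR\] .*?"
    r"Can't open file: '(?P<file>[^']+)' \(errno: (?P<errno>\d+)\)"
)
CRASHED = 145  # MyISAM: table marked as crashed and should be repaired


def crashed_tables(log_lines):
    """Return the sorted table names reported with errno 145."""
    tables = set()
    for line in log_lines:
        m = PATTERN.search(line)
        if m and int(m.group("errno")) == CRASHED:
            # 'short_fields.MYI' -> 'short_fields'
            tables.add(m.group("file").rsplit(".", 1)[0])
    return sorted(tables)


# Usage, with a hypothetical log location:
#   with open("/var/log/mysqld.log") as f:
#       print(crashed_tables(f))
```

The standard first response for a crashed MyISAM table is CHECK TABLE followed by REPAIR TABLE; whether a repaired table leaves the RB bookkeeping consistent is a separate question.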

I toyed with the idea of trying to save the database; however, it would probably have left us with an internally inconsistent RB database, as well as orphaned files in the /var/edgwl bookkeeping area.

Reluctantly I decided that the only sensible recourse was to reinstall svr023, and take the hit of the lost jobs.

This has now been done, and normal service has been resumed.

In the course of the re-install the /var partition has been grown to more than 100GB, which should protect us against large log files for quite a time.

Frankly, all very annoying and I'm quite upset that we lost users' jobs.

Sorry folks. It shouldn't happen again.

MPI Running Properly at Glasgow

I was very keen to take up Stephen Child's offer to get MPI enabled properly at Glasgow. (See link for my last attempt at getting this working, where I cobbled together something that was far from satisfactory, but at least proved it was possible in theory to get this to work.)

A problem for MPI at Glasgow is that our pool account home directories are not shared and that jobs all wake up in /tmp anyway. For local users we offer the /cluster/share area, which gets around this, but what to do for generic MPI jobs? We decided it would be a very good idea to offer a shared area for MPI jobs, and that the right strategy would be to modify mpi-start to pick the job up by its bootstraps and drop it back down into a shared directory area in /cluster/share/mpi. To do this we decided to generalise the MPI_SHARED_HOME environment variable. Previously this had been "yes" or "no", but in the new scheme, if it points to a directory, the script transplants the job to an appropriate subdirectory of this area.
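The generalised MPI_SHARED_HOME decision can be sketched as follows. mpi-start itself is a shell script, so this Python version only mirrors the logic described above; naming the per-job subdirectory after PBS_JOBID (falling back to the process ID) is an assumption for illustration, not necessarily what the rebuilt mpi-start does.

```python
import os


def shared_workdir(env):
    """Decide where an MPI job should run, given its environment.

    - MPI_SHARED_HOME unset or "no": no shared area, stay where we are.
    - "yes": the home directory is already shared, stay where we are.
    - any other value: treat it as a shared base directory and move the
      job into a per-job subdirectory beneath it.
    Returns the directory to run in, or None to stay in the current one.
    """
    value = env.get("MPI_SHARED_HOME", "no")
    if value in ("yes", "no"):
        return None
    job_id = env.get("PBS_JOBID") or str(os.getpid())  # assumed naming scheme
    return os.path.join(value, job_id)


# Usage: target = shared_workdir(os.environ); if target is not None, the
# wrapper would create it, copy the job's files in, and chdir there before
# calling mpiexec.
```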

On the site side I had to make sure all the MPI environment variables were properly defined in the job's environment (which we do with /etc/profile.d/mpi.sh) and advertise the right MPI attributes in the information system.

It all went pretty well, until we had an issue with mpiexec not being able to invoke the other job threads properly. (Mpiexec starts the other job threads via torque, which means they get accounted for properly and that we can disable passwordless ssh between the WNs, which we did at the SL4 upgrade.) There was a fear it was due to some weird torque build problem, but in the end it was a simple issue with server_name not being properly defined on the workers. A quick bit of cfengine and this was fixed.
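A trivial worker-node check that would have caught the server_name problem is sketched below: each worker should have a non-empty server_name file in the torque home directory that names the batch server. The default directory /var/spool/pbs and the expected hostname in the usage comment are assumptions; adjust them for the local torque build.

```python
import os

# Assumed default torque home; builds differ (e.g. /var/spool/torque).
TORQUE_HOME = os.environ.get("TORQUE_HOME", "/var/spool/pbs")


def check_server_name(torque_home, expected):
    """Return a list of problems with torque_home/server_name (empty if fine)."""
    path = os.path.join(torque_home, "server_name")
    try:
        with open(path) as f:
            name = f.read().strip()
    except OSError as err:
        return [f"cannot read {path}: {err.strerror}"]
    if not name:
        return [f"{path} is empty"]
    if name != expected:
        return [f"{path} says {name!r}, expected {expected!r}"]
    return []


# Usage on a worker, with a hypothetical batch server name:
#   for problem in check_server_name(TORQUE_HOME, "batch.example.org"):
#       print(problem)
```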

So, Glasgow now supports MPI jobs: excellent. (Stephen will rebuild the newly featured mpi-start and release it next week.)

Big thanks to Stephen for setting this up for us.

Lunch with glexec developers

We had a very interesting lunchtime meeting with the glexec developers on Tuesday (organised thanks to Alessandra's efforts in the TCG to convince the developers that the sites had serious issues with glexec). The developers were very happy to meet us and discuss the origins of glexec and why they thought it was needed. It was clear that no-one in the meeting is at all keen on generic pilot job frameworks. However, what's also clear is that the LHC VOs are going to insist on having them, and it's highly unlikely that sites would ever be able to exert enough influence on them to stop. What we can do, though, is insist that if such frameworks do exist, then they will have to use a glexec call and respect its result. What glexec gives, in essence, is sudo-like abilities, but integrated into an X509-based authorisation scheme (and it uses LCAS/LCMAPS plugins, which we are familiar with). The advantages to the sites are that (1) there is a lot of control as to who can call glexec in the first place, e.g., restricting this only to production roles; (2) using LCMAPS, single users can be banned, whereas without glexec the only option with misbehaving pilot jobs is to ban the pilot user on the CE (which is tantamount to banning the whole VO); (3) there's an audit trail of whose payload has been executed (which is crucial). glexec has safeguards to stop multiple calls (i.e., the payload recalling glexec).
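To make those points concrete, here is a toy Python model of the decision a glexec-style wrapper makes before switching identity: only callers holding an allowed VOMS role may invoke it, individually banned payload owners are refused, every decision goes to an audit log, and a nested call is refused. This is not glexec's real interface or configuration; the FQANs, the ban list and the GLEXEC_ACTIVE marker variable are all hypothetical.

```python
import logging
from dataclasses import dataclass

audit = logging.getLogger("glexec-audit")

# Hypothetical policy: only production pilots may call the wrapper.
ALLOWED_CALLER_FQANS = {"/atlas/Role=production", "/lhcb/Role=pilot"}
BANNED_PAYLOAD_DNS = {"/C=XX/O=Example/CN=misbehaving user"}


@dataclass
class Request:
    caller_dn: str    # the pilot's identity
    caller_fqan: str  # the pilot's VOMS role
    payload_dn: str   # the identity the payload would run as
    env: dict         # environment the call was made from


def authorise(req):
    """Return (allowed, reason) and record the decision for the audit trail."""
    if req.env.get("GLEXEC_ACTIVE") == "1":
        allowed, reason = False, "nested call refused"
    elif req.caller_fqan not in ALLOWED_CALLER_FQANS:
        allowed, reason = False, "caller role not permitted"
    elif req.payload_dn in BANNED_PAYLOAD_DNS:
        allowed, reason = False, "payload owner banned"
    else:
        allowed, reason = True, "ok"
    audit.info("caller=%s payload=%s allowed=%s reason=%s",
               req.caller_dn, req.payload_dn, allowed, reason)
    return allowed, reason
```

Calling `logging.basicConfig(level=logging.INFO)` first makes the audit records visible. Note how banning a single payload DN leaves the pilot, and so the rest of the VO, untouched.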

Then, to suid or not? We are assured that glexec will be distributed in two flavours: one with the suid bit switched on in the RPM, the other with it switched off. Of course, post-installation, one can easily flip the bit using cfengine. The danger of not enabling suid is that it will be possible for the payload job to access the submission proxy certificate (danger for the VO) and that sorting out the payload from the pilot is harder at the process level (danger for the site). Of course, this has to be balanced against the danger of enabling suid and risking a possible avenue of privilege escalation should glexec turn out to have a security problem.
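Flipping the bit after installation amounts to a chmod; the Python sketch below shows the check and the change a cfengine rule would effectively make. The install path in the comment is a guess, not a confirmed location, and the demo uses a throwaway file. The setuid bit only grants root privileges if the file is owned by root.

```python
import os
import stat
import tempfile


def has_suid(path):
    """True if the setuid bit is set on path."""
    return bool(os.stat(path).st_mode & stat.S_ISUID)


def set_suid(path, enabled):
    """Set or clear the setuid bit, leaving the other mode bits unchanged."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    new_mode = (mode | stat.S_ISUID) if enabled else (mode & ~stat.S_ISUID)
    os.chmod(path, new_mode)
    return new_mode


def demo():
    """Toggle the bit on a temporary file and report what happened."""
    with tempfile.NamedTemporaryFile() as f:
        os.chmod(f.name, 0o755)
        set_suid(f.name, True)
        turned_on = has_suid(f.name)
        set_suid(f.name, False)
        return turned_on, has_suid(f.name), stat.S_IMODE(os.stat(f.name).st_mode)


# e.g. set_suid("/opt/glite/sbin/glexec", False)  # hypothetical install path
```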

I'm still convinced that for ScotGrid deploying glexec in non-suid form is best. We can run it for a while and then evaluate the situation further. It's clear that sites like ECDF will never allow glexec to be suid for them, so running it in non-suid mode will always be an option.

The security vulnerabilities that Kostas identified have all been fixed, along with more potential problems the developers spotted when going through the code. They are very happy for other people to look at the code and feed back problems to them.

Overall I was very impressed at the developers' openness. I still don't think generic pilots are a good idea for the grid, but in a world where they exist glexec is a definite help.

EGEE 07 Round-up

Here's a round-up of notes and interesting bits

EGI (European Grid Infrastructure) was a big theme during the conference. This is expected to be the permanent grid infrastructure replacement which arrives after the end of EGEE III. There's lots to be worked out, not least of which is the need for a sustainable national grid infrastructure (NGI) in each country or region.

NA4

Workbench Overview: Taverna; Review; There will always be a zoo of workflow engines. Which one to choose? No answer yet! Information system - need to engage GLUE. Pushes pain from workflow people to middleware providers?

Lots of portals and workflows out there - Taverna, P-Grade, Ganga/Diane.

Unfortunately, because of the SA3 and SA1 sessions, I didn't get to see the demos.

SA3

gLite release process: should patches be bundled or not? Can we divide patches into simple RPM updates and more complex changes requiring reconfiguration?

YAIM 4 is coming. Proper component-based YAIM, with a hierarchy of configuration files (site general -> component specific). Should be much better. It has _pre and _post function hooks, making it much more flexible to override aspects of YAIM without losing core functionality.
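YAIM itself is a set of bash functions, so what follows is only a conceptual Python sketch of the two ideas above. It is not YAIM's code. It shows two things. First, configuration layers where component-specific values override site-general ones. Second, pre/post hooks that run around an untouched core function. All names here (`layered_config`, `Component`, `configure`) are hypothetical.

```python
from typing import Callable, Dict, List

Config = Dict[str, str]
Step = Callable[[Config, List[str]], None]


def layered_config(*layers: Config) -> Config:
    """Merge layers in order (site-general first, component-specific last).

    Later layers override earlier ones key by key.
    """
    merged: Config = {}
    for layer in layers:
        merged.update(layer)
    return merged


class Component:
    """A hypothetical configurable component with a core step and hooks."""

    def __init__(self, name: str, core: Step) -> None:
        self.name = name
        self.core = core
        self.pre_hooks: List[Step] = []
        self.post_hooks: List[Step] = []

    def configure(self, config: Config, log: List[str]) -> None:
        # Site-supplied pre hooks run first, then the unmodified core step,
        # then the post hooks: local tweaks never replace the core logic.
        for hook in self.pre_hooks:
            hook(config, log)
        self.core(config, log)
        for hook in self.post_hooks:
            hook(config, log)
```

The point of the design is that a site adds behaviour by appending to `pre_hooks` or `post_hooks` rather than editing `core`. Upgrades to the core function therefore don't clobber local changes.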

SA1

Ian Bird: Good overview of operations. Big scale-up next year with the LHC. The transition to EGI and RGIs will be a very big challenge.

JRA1

gLite Overview:

See Claudio's presentation. Note that for the highest performance you should run the LB and WMS separately. Well, for an initial trial service this is probably not needed (we're not supporting 12000 jobs a day!). The timescale for porting components to SL4 i386 is now (roughly) known.

x86_64 is expected to be complete next spring (but DPM/LFC are already there).

OMII Europe:

I sat in on this as it was a project I had heard a lot about, but I didn't know what they did. They have partners in the US and Asia, and their work includes:

Adding BES support to CREAM. (This might _eventually_ lead to dropping the native CREAM interface, but not soon, since the WMS uses it.) BES is the proposed OGF standard for job submission.

Adding SAML support to VOMS. (Security Assertion Markup Language: http://en.wikipedia.org/wiki/SAML).

Involved in Glue 2.0. Will help with schema production in XML and LDAP and assist standardisation efforts.

Friday, October 05, 2007

knotty nat knowledge

Hmm, that's odd: why aren't the NAT boxes visible on Ganglia?

It seemed a simple enough problem - they used to be there, but for some reason fell off the plots in late August.

Had the boxes restarted and failed to start gmond? Nope - good uptime. Is gmond running? Yep. Telnet to the gmond port? Yep. Hmm. The XML for the cluster was just:

<CLUSTER NAME="NAT Boxes" ... >

</CLUSTER>

and no HOST or METRIC lines between them. Most odd. After some discussion with Dr Millar, it turned out to be a probable issue with the Linux multicast setup: the kernel wasn't choosing the same interface to listen and send on. Luckily, this was patched in a newer version of Ganglia, whose config file supports the mcast_if parameter to set the interface explicitly (in our case, to the internal interfaces).
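Ganglia does this internally once mcast_if is set. The sketch below is not Ganglia's code. It is just a Python illustration of what pinning both directions to one interface means at the socket level. For sending, it sets IP_MULTICAST_IF. For receiving, it puts the interface address into the IP_ADD_MEMBERSHIP request, instead of letting the kernel pick. 239.2.11.71:8649 is gmond's usual default channel, but it is an assumption here, so check it against your gmond.conf. 10.141.0.254 is a hypothetical internal address.

```python
import socket
import struct

GROUP = "239.2.11.71"     # assumed: gmond's usual default multicast group
PORT = 8649               # assumed: gmond's usual default port
IFACE_IP = "10.141.0.254" # hypothetical address of the internal interface


def mreq_for(group, iface_ip):
    """Build an ip_mreq: multicast group address followed by local interface address."""
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(iface_ip))


def make_sender(iface_ip, ttl=1):
    """UDP socket whose multicast traffic leaves via iface_ip, not the routing default."""
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    s.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_IF, socket.inet_aton(iface_ip))
    s.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, ttl)
    return s


def make_receiver(group, port, iface_ip):
    """UDP socket that joins the group on iface_ip, the same interface we send on."""
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    s.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    s.bind(("", port))
    s.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, mreq_for(group, iface_ip))
    return s
```

If the join uses INADDR_ANY and the send uses the routing table's choice, the two can end up on different interfaces. That is the mismatch described above.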

Sadly, of course, the out-of-the-box RPM doesn't install on SL4 x86_64 because of unmet dependencies (as normal...), so a quick compile on one of the worker nodes and some dirty hackery copying the binary over worked a treat. We now have NAT box stats again.
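The telnet check above can be scripted, so that a cluster which silently stops reporting hosts gets noticed sooner. This is a minimal Python sketch. It assumes gmond's default TCP port 8649 (the tcp_accept_channel in gmond.conf) and the GANGLIA_XML / CLUSTER / HOST layout seen above. It flags any CLUSTER element that contains no HOST elements.

```python
import socket
import xml.etree.ElementTree as ET

GMOND_PORT = 8649  # assumed default; check tcp_accept_channel in gmond.conf


def fetch_gmond_xml(host, port=GMOND_PORT, timeout=10.0):
    """Read the full XML dump gmond writes on connect (what telnet shows)."""
    chunks = []
    with socket.create_connection((host, port), timeout=timeout) as sock:
        while True:
            data = sock.recv(65536)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks)


def empty_clusters(xml_bytes):
    """Return the NAME of every CLUSTER element that has no HOST children."""
    root = ET.fromstring(xml_bytes)
    return [c.get("NAME") for c in root.iter("CLUSTER") if c.find("HOST") is None]
```

Usage would be something like `print(empty_clusters(fetch_gmond_xml("localhost")))`, run from wherever the collector's gmond listens.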

Monday, October 01, 2007

NFS does TCP (unexpectedly)

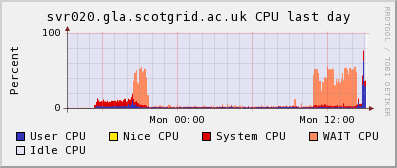

We were alerted by our users to the fact that svr020 was going very, very slowly. Logging in took more than a minute, and a simple ls on one of the NFS areas was taking ~20s.

Naturally these damn things always happen when you're away and sitting with a 15% battery in a session which is actually of interest!

Anyway, I couldn't see what was wrong on svr020 - the sluggishness seemed symptomatic rather than tied to any bad process or user. There were clear periods when we hit serious CPU wait. When I checked disk037, I found many, many error messages like:

nfsd: too many open TCP sockets, consider increasing the number of nfsd threads

nfsd: last TCP connect from 10.141.0.101:816

When I checked the worker nodes, sure enough, we seemed to have inadvertently switched to using TCP for NFS. I later found out this is the default mount option on 2.6 kernels (which, obviously, we've just switched to).
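A quick way to see which transport each NFS mount on a worker node is using is to read /proc/mounts. This Python sketch assumes the kernel reports the transport either as a `proto=tcp`/`proto=udp` option or as a bare `tcp`/`udp` flag (formats vary between kernel versions). It only looks at filesystems of type nfs or nfs4.

```python
def nfs_mount_protocols(mounts_text):
    """Map each NFS mount point to its transport, parsed from /proc/mounts text."""
    result = {}
    for line in mounts_text.splitlines():
        fields = line.split()
        # /proc/mounts fields: device, mount point, fs type, options, dump, pass
        if len(fields) < 4 or fields[2] not in ("nfs", "nfs4"):
            continue
        proto = "unknown"
        for opt in fields[3].split(","):
            if opt.startswith("proto="):
                proto = opt.split("=", 1)[1]
            elif opt in ("tcp", "udp"):
                proto = opt
        result[fields[1]] = proto
    return result
```

On a node this would be run as `with open("/proc/mounts") as f: print(nfs_mount_protocols(f.read()))`.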

I decided to follow the advice in the error message and run more nfsd threads, so I created the file /etc/sysconfig/nfs containing RPCNFSDCOUNT=24 (the default is 8). I then (rather nervously) restarted NFS on disk037.

After this, the load on svr020 returned to normal and the kernel error messages stopped on disk037. Whew!

Relief could clearly be seen, with the cluster load dropping back down to the number of running processes, instead of being "overloaded" as it had been.

We should use MonAMI to monitor the number of NFS TCP connections on disk037. (Just out of interest, the number is now 343, which must have caused problems for 8 nfs server daemons; hence, 24 should scale to ~900 mounts.)
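Until a MonAMI probe exists, here is a stand-alone Python sketch (not a MonAMI plugin) that counts established IPv4 connections to the NFS port (2049) from /proc/net/tcp. It also compares the count against a connection limit. The limit rests on an assumption: our reading of the 2.6-era sunrpc code, which starts closing the oldest connection once open TCP sockets exceed (threads + 3) * 20. Check that against your kernel source. Under that assumption, 8 threads tolerate about 220 connections, which fits the trouble at 343. 24 threads would give roughly 540 rather than ~900.

```python
NFS_PORT = 2049
TCP_ESTABLISHED = "01"  # state code used in /proc/net/tcp


def count_nfs_tcp_connections(proc_net_tcp_text, port=NFS_PORT):
    """Count ESTABLISHED sockets whose local port is `port` (IPv4 only)."""
    count = 0
    for line in proc_net_tcp_text.splitlines()[1:]:  # first line is the header
        fields = line.split()
        if len(fields) < 4:
            continue
        local_port = int(fields[1].split(":")[1], 16)  # ports are hex-encoded
        if local_port == port and fields[3] == TCP_ESTABLISHED:
            count += 1
    return count


def conn_limit(nfsd_threads):
    """Assumed kernel threshold: (threads + 3) * 20 open TCP sockets."""
    return (nfsd_threads + 3) * 20


def min_threads_for(connections):
    """Smallest thread count whose assumed limit is not exceeded by `connections`."""
    return max(1, -(-connections // 20) - 3)  # ceiling division, minus 3
```

Used as `with open("/proc/net/tcp") as f: n = count_nfs_tcp_connections(f.read())`, followed by a warning if `n` approaches `conn_limit(24)`. IPv6 clients would appear in /proc/net/tcp6 instead.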

Of course, we could shift back to UDP NFS mounts if we wanted. Reading through the NFS FAQ might help us decide.

Torque Queue ACLs

I got an email from Rod Walker on Friday night. He was having trouble submitting to the ATLAS queue on the cluster - again the infamously unhelpful "Unspecified gridmanager error".

I checked his mapping, and LCMAPS was correctly mapping him to one of the new ATLAS production accounts. However, when I looked at the queue, the Torque queue configuration had lost the ACL which allowed sgm and prd accounts to submit to it.

I corrected that and all was well again.

I think these ACLs were probably never set correctly on the cluster, as they seemed to be missing on most of the queues (notably, the ops queue was not affected). The cfengine script to set up the queues had the correct ACL setup in it, but I guess it had never been run.
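A check like the following could catch a queue whose ACL has drifted from what cfengine intended. It is a Python sketch that parses the output of `qmgr -c "print queue <name>"`. It assumes the usual `set queue Q attr = value` / `set queue Q attr += value` lines. It reports which required entries are missing and prints the qmgr commands to add them. The group names (atlas, atlassgm, atlasprd) are hypothetical. Whether the ACL uses acl_groups or acl_users depends on the site. Remember that the matching acl_group_enable (or acl_user_enable) flag must also be True.

```python
import re
import subprocess

# Matches lines such as "set queue atlas acl_groups += atlasprd"
SET_RE = re.compile(r"^set queue (\S+) (\S+) (\+?=) (.+)$")


def fetch_queue_config(queue):
    """Return the text of `qmgr -c "print queue <queue>"` (needs a Torque server)."""
    return subprocess.run(["qmgr", "-c", f"print queue {queue}"],
                          capture_output=True, text=True, check=True).stdout


def attribute_values(qmgr_print, queue, attribute):
    """Collect the values of one queue attribute; '=' resets, '+=' appends."""
    values = set()
    for line in qmgr_print.splitlines():
        m = SET_RE.match(line.strip())
        if not m or m.group(1) != queue or m.group(2) != attribute:
            continue
        if m.group(3) == "=":
            values = set()
        values.update(v.strip() for v in m.group(4).split(","))
    return values


def missing_acl_entries(qmgr_print, queue, required, attribute="acl_groups"):
    """Required ACL entries not present on the queue, sorted."""
    return sorted(set(required) - attribute_values(qmgr_print, queue, attribute))


def qmgr_fix_commands(queue, missing, attribute="acl_groups"):
    """qmgr commands that would append the missing entries."""
    return [f"set queue {queue} {attribute} += {name}" for name in missing]
```

For example, `missing_acl_entries(fetch_queue_config("atlas"), "atlas", ["atlas", "atlassgm", "atlasprd"])` would list any group the queue no longer admits.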

The effect on ATLAS services was notable - we ran an awful lot more ATLAS production this weekend (blue jobs).
