Mark, Phil and Nigel have enabled maui fairshares on the Durham cluster.

This is excellent news and should help them get better numbers on, e.g., Steve Lloyd's ATLAS tests.

I'm not quite sure how fair shares and pre-emption interact - I assume that they're somewhat independent.

Monday, April 30, 2007

Greig re-testing DPM 1.6.3 rfio

Thanks to Greig for retesting our DPM 1.6.3's rfio.

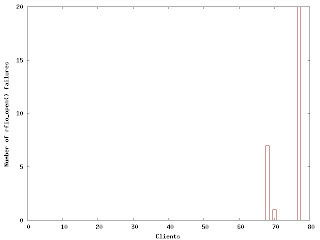

The good news is that the code changes from 1.5.10 drastically reduce the number of rfio_open() failures. These now only crop up at about 70 clients (all within 1 second). The average open time is also much more stable, never rising above 2.5s.

However, we now see a problem with I/O wait on the disk server, and the total LAN rate struggles to reach 200MB/s, whereas 1.5.10 easily reached 400MB/s.

This remains to be investigated, but the only change on the disk servers is the update of DPM version, so I think it must be a DPM issue.

Biomed and VOMS

We got a ticket from Biomed about their jobs not running properly.

I had a suspicion that this was the case, and when I went to the yaim tool site I found that not only had they changed their VOMS certificate, which I had updated, but they had also changed their VOMS server's DN.

So, I changed their VOMS DN in our site-info.def and re-ran the config_mkgridmap function on the CE, which also configures LCMAPS. After that things seemed fine.

One to watch out for, though, when updated VOMS certificates come through.

Intervention At Edinburgh

I had to intervene at Edinburgh 2 weeks ago (14th, just before I went to London for the T2 review). They had been failing JS since the Friday night. Logging on I could see a stack of ops jobs, but nothing running on several WNs.

I tried starting the oldest ops job using runjob -cx, but that didn't work, giving the error:

04/14/2007 16:54:30;0080;PBS_Server;Req;req_reject;Reject reply code=15057(Cannot execute at specified host because of checkpoint or stagein files), aux=0, type=RunJob, from root@ce.epcc.ed.ac.uk

Not at all clear to me what was going on. I tried running different ops jobs and they all started and ran properly, so in the end I deleted that job from the queue and that seemed to ungunge things.

Torque seems to produce rather unhelpful information in these sorts of cases, unless I'm just looking in the wrong places.
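
When hunting through the server logs for rejections like the one above, it can help to split each line into its fields and pull out the reply code. The following is a minimal Python sketch, assuming the log line is six semicolon-separated fields as in the example; the field names (`event_code`, `object_type` and so on) are illustrative labels chosen here, not official Torque terminology.

```python
import re
from typing import NamedTuple, Optional


class PbsLogRecord(NamedTuple):
    # Field names are illustrative assumptions, not official Torque names.
    timestamp: str
    event_code: str
    source: str
    object_type: str
    object_name: str
    message: str


def parse_pbs_log_line(line: str) -> PbsLogRecord:
    """Split one server log line into its six semicolon-separated fields."""
    # maxsplit=5 keeps any semicolons inside the free-text message intact.
    parts = line.rstrip("\n").split(";", 5)
    if len(parts) != 6:
        raise ValueError(f"unexpected log line format: {line!r}")
    return PbsLogRecord(*parts)


def reject_code(record: PbsLogRecord) -> Optional[int]:
    """Return the numeric reply code of a rejected request, or None."""
    match = re.search(r"Reject reply code=(\d+)", record.message)
    return int(match.group(1)) if match else None


# The log line quoted in the post.
line = (
    "04/14/2007 16:54:30;0080;PBS_Server;Req;req_reject;"
    "Reject reply code=15057(Cannot execute at specified host because of "
    "checkpoint or stagein files), aux=0, type=RunJob, "
    "from root@ce.epcc.ed.ac.uk"
)
record = parse_pbs_log_line(line)
print(record.timestamp, record.object_name, reject_code(record))
```

Grouping the extracted codes by job or by host would at least show whether one job is responsible for all the rejections, as seemed to be the case here.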

Tuesday, April 24, 2007

iperf redux

Glasgow Worker Nodes Filled Up

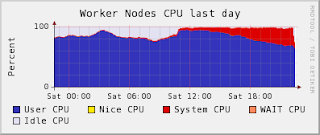

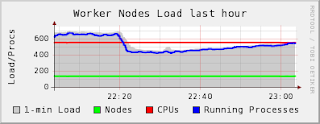

Browsing the Glasgow ganglia plots on Saturday night I noticed a very weird situation, where the load was going over the number of job slots and an increasing amount of CPU was being consumed by the system.

It took a while to work out what was going on, but I eventually tracked it down to /tmp on certain worker nodes getting full - there was an out of control athena.log file in one ATLAS user's jobs which was reaching >50GB. Once /tmp was full it crippled the worker node and other jobs could not start properly - atlasprd jobs untarring into /tmp stalled and the system CPU went through the roof.

It was a serious problem to recover from this - it required the offending user's jobs to be canceled, and a script to be written which cleared out the /tmp space. After that the stalled jobs also had to be qdeled, because they could not recover.

This did work - the load comes back down under the red line and then fills back up as working jobs come in as can be seen from the ganglia plots.

This clearout was done between 2230 and 2400 on a Saturday night, which royally p***ed me off - but I knew that if I left it until Monday the whole site would be crippled.

I raised a GGUS ticket against the offending user. Naturally there wasn't a response until Monday; however, it did prove that it is possible to contact a VO user through GGUS.

Lessons to learn: we clearly need to monitor disk on the worker nodes, both /home and /tmp. The natural route to do this is through MonAMI, with trends monitored in ganglia and alarms in nagios. Of course, we need to get nagios working again on svr031 - the president's brain will be re-inserted next week! In addition, perhaps we want at least a group quota on /tmp, so that VOs can kill themselves but not other users.
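
As a rough illustration of the kind of check this implies (it is not the MonAMI, ganglia or nagios configuration itself), here is a minimal Python sketch that reports which filesystems are over a fill threshold. The function names and the 90% threshold are assumptions made for the example; note also that `shutil.disk_usage` ignores root-reserved blocks, so the fraction can differ slightly from what `df` shows.

```python
import os
import shutil
from typing import Dict, Iterable, List


def disk_fill_fractions(paths: Iterable[str]) -> Dict[str, float]:
    """Map each path to the used fraction (0.0-1.0) of its filesystem."""
    usage = {}
    for path in paths:
        total, used, _free = shutil.disk_usage(path)
        usage[path] = used / total if total else 0.0
    return usage


def over_threshold(usage: Dict[str, float], threshold: float = 0.9) -> List[str]:
    """Return the paths whose fill fraction is at or above the threshold."""
    return sorted(path for path, frac in usage.items() if frac >= threshold)


# Check the two areas named in the post, skipping any that do not exist here.
existing = [p for p in ("/tmp", "/home") if os.path.isdir(p)]
for path in over_threshold(disk_fill_fractions(existing)):
    print(f"WARNING: {path} is at least 90% full")
```

Something like this could be run from cron on each worker node, or its numbers fed into whatever monitoring ends up doing the alarming.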

Blogger Catchup

Networking problems and the LT2 reviews have meant that it's been really hard to blog in the last week. Now trying to catch up, so hang on to your hats...

Monday, April 16, 2007

Database housekeeping

I had to perform some housekeeping on the dCache PostgreSQL database today. Auto-vacuuming was not enabled by default and postgres was starting to consume lots of CPU cycles. More information over on the storage blog:

http://gridpp-storage.blogspot.com/2007/04/postgresql-housekeeping.html

Wednesday, April 11, 2007

Edinburgh storage woes

Edinburgh has been suffering lately from a number of failures in the SAM replica management tests. Looking at the dCache webpages reveals the reason:

http://srm.epcc.ed.ac.uk:2288/usageInfo

Rather than displaying the usual pool usage information, the table contained entries like this:

pool1_04 pool1Domain [99] Repository got lost

The "Repository got lost" error can be explained as follows. The dCache periodically runs a background process which attempts to write a small test file onto each pool in an attempt to check that it is still operational. If this fails then the above error message will be generated. According to the dCache developers this will occur if there is a filesystem problem or a disk is not responding quickly enough.

What is strange, however, is that the recent problems have resulted in all of our dCache pools being marked with the above error. It seems strange that the same filesystem or disk issue would simultaneously affect all of the pools. I have submitted a ticket to dCache support in an attempt to get more information.

The problem can easily be fixed by restarting the pool process on the affected dCache pool node. In the above case it is pool1.epcc.ed.ac.uk since the pool in question is named pool1_04.

service dcache-pool restart
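
To work out which pool nodes need that restart when many pools are affected, the error lines can be mapped back to host names. This is a minimal Python sketch, assuming the table has been saved as plain text with one line per pool in the form shown above, and assuming every pool follows the `<node>_<number>` naming seen in pool1_04; the function name `lost_pools` is made up for the example.

```python
import re
from typing import List, Tuple

# Matches lines such as: "pool1_04 pool1Domain [99] Repository got lost"
ERROR_LINE = re.compile(
    r"^(?P<pool>\S+)\s+(?P<domain>\S+)\s+\[(?P<code>\d+)\]\s+(?P<message>.+)$"
)


def lost_pools(text: str, domain_suffix: str = "epcc.ed.ac.uk") -> List[Tuple[str, str]]:
    """Return sorted (pool node host, pool name) pairs for lost repositories."""
    hits = set()
    for raw in text.splitlines():
        match = ERROR_LINE.match(raw.strip())
        if match and match.group("message") == "Repository got lost":
            pool = match.group("pool")
            # Assumption: the part before the underscore is the node name.
            node = pool.split("_", 1)[0]
            hits.add((f"{node}.{domain_suffix}", pool))
    return sorted(hits)


sample = "pool1_04 pool1Domain [99] Repository got lost\n"
print(lost_pools(sample))
```

The distinct host names in the result are the nodes on which to run the restart command.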

I have started to add material to this page

http://www.gridpp.ac.uk/wiki/Edinburgh_dCache_troubleshooting

to describe our dCache setup in more detail. This should give people a better idea of how a working system should be configured. I will add more information when I have time.

Thursday, April 05, 2007

Server Spread

Scotgrid-Glasgow continues to grow. Not only do we have the vobox for a user group, but we now have a brace of test Scientific Linux 5 servers kickstarted and ready to go. Changes that we needed to make to our existing setup were minimal, the main one being the need to add noipv6 to the PXE command line, otherwise the installer hunts (and fails) for an IPv6 address by DHCP.

I have also added a "sl5" flavour to our local repository for Misc Stuff RPMs (such as cfengine) in both i386 and x86_64. One niggle is that you have to run createrepo once in each location if you use the $basearch variable in the repo config source.

Next step - install apache onto one of the boxes, tweak config and see if it has all the correct mirroring arrangements and replace the brain dead svr031 version.

Labels: cfengine, Fabric Management, UKI-SCOTGRID-GLASGOW

Wednesday, April 04, 2007

APEL Configuration Twiddle

Reviewing some of the ScotGrid status pages I noticed we hadn't published accounting data for about a week. Trying to run APEL by hand revealed why - it was set to use the old sBDII on svr016 instead of the new one on svr021. This had been running on for months, even though the site's published GIIS endpoint had been changed to svr021 months ago - and I had finally switched it off about a week ago.

Once this was corrected (in /opt/glite/etc/glite-apel-pbs/parser-config-yaim.xml) things ran through fine.

Defining SPEC Values for the Cluster

I had a long discussion with Mark about getting the SPEC values correct for Durham. There's no really good answer to this apart from go to the SPEC Website and try and find machines with the same processor types and vintage as your own (ideally with the same motherboard). N.B. One should really use the "base" values - these have a conservative set of compiler flags so are more appropriate for pre-compiled EGEE applications - the peak values enable all the bells and whistles on the compiler.

I was also prompted to look at the numbers I had put in for the new Glasgow cluster. Here we have Opteron 280s. There are now 10 measurements for the SI2K of these machines - these are all very close and average to 1533, so that's what I have now put (up slightly from 1450). The FP2K values have a bigger spread (different chipsets?), but in the absence of any guide I again took the average, which was 1770.

I also noticed that CPU2000 has now officially been retired - replaced by CPU2006. This is going to be a problem as CPU2000 will not be available for newer machines, but CPU2006 will not be available for older ones. How do you express that in your JDL?

Labels: Accounting, SPEC, UKI-SCOTGRID-DURHAM, UKI-SCOTGRID-GLASGOW

Tuesday, April 03, 2007

DPM GRIS goes nuts at Glasgow

I was amazed to find that Glasgow was in a gstat warn condition this evening, because we were reporting 0GB storage available.

When I checked on the SE the plugin was able to run fine, but it didn't seem to be being run properly - so everything was reporting zeros.

I tried restarting the GRIS, but this didn't work (it had been running since last year), so I had to kill off the process and then restart it. Finally, for good measure I restarted rgma-gin, which is one of the plugins providing the dynamic information.

Still, it took a few minutes before the GRIS started to properly provide the correct information.

However, from 6pm until 10pm we apparently had no storage.

Any thoughts on how to monitor the information system? Well, we did go into "warn" status on the SAM sBDII seavail test, so we should ensure that gets trapped by Paul's MonAMI SAM feeds.

Labels: BDII, Reliabilty, Storage, UKI-SCOTGRID-GLASGOW

Disabling SAME/R-GMA in the Job Wrapper

Alessandra raised a GGUS ticket about the R-GMA client issues in the job wrapper. She got a rapid response, along with a recipe to disable them:

Unfortunately this is a known problem with SAM CE JobWrapper Tests and R-GMA. We are using R-GMA command line utility to publish a small piece of data from worker nodes but unfortunately sometimes R-GMA hangs for quite a lot of time.

We are planning a new release of JobWrapper tests without R-GMA publishing (replaced completely by our internal SAM/GridView transport mechanism). But for the time being the only solution is to disable JobWrapper tests on your site if you observe such a behaviour.

To do this you have to remove all the symlinks that appear in the following two directories on all WNs:

$LCG_LOCATION/etc/jobwrapper-start.d

$LCG_LOCATION/etc/jobwrapper-end.d

I have now put in the necessary cfengine stanza to delete the links and stop this nonsense:

    disable:
        worker::
            # Disable the SAM wrapper which uses R-GMA
            /opt/lcg/etc/jobwrapper-start.d/01-same.start
            /opt/lcg/etc/jobwrapper-end.d/01-same.end

Now, how big a difference does it make? Quite a lot for short jobs - the wallclock time for a simple globus-job-run has gone down from 5 minutes to 3 seconds!

The total time for the jobmanager to handle the job has remained quite high - 1m30s c.f. 16s for the pbs jobmanager. However, at least no one is going to be "charged" for the time that the job is with the gatekeeper, unlike the time spent in the batch queue.
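
For sites without cfengine, the recipe quoted above (remove the symlinks in the two job wrapper directories) can be sketched in Python. This is a minimal illustration, not a tested deployment tool: the function name `disable_wrapper_links` and the dry-run default are choices made for the example, and falling back to /opt/lcg when `LCG_LOCATION` is unset is an assumption based on the paths in the stanza.

```python
import os
from typing import Iterable, List


def disable_wrapper_links(directories: Iterable[str], dry_run: bool = True) -> List[str]:
    """Find (and, unless dry_run, remove) every symlink in the given directories."""
    handled = []
    for directory in directories:
        if not os.path.isdir(directory):
            continue  # nothing to do on nodes without the job wrapper
        with os.scandir(directory) as entries:
            links = sorted(e.path for e in entries if e.is_symlink())
        for link in links:
            if not dry_run:
                os.unlink(link)  # removes the link only, never its target
            handled.append(link)
    return handled


# Assumption: fall back to /opt/lcg if LCG_LOCATION is not set.
lcg_location = os.environ.get("LCG_LOCATION", "/opt/lcg")
wrapper_dirs = [
    os.path.join(lcg_location, "etc", name)
    for name in ("jobwrapper-start.d", "jobwrapper-end.d")
]
# Dry run: only report what would be removed.
print(disable_wrapper_links(wrapper_dirs, dry_run=True))
```

Running it with the default `dry_run=True` first shows what would go before anything is actually unlinked on the worker nodes.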

GRIS Wobbles in Glasgow CE

Between 12 and 1pm yesterday the site BDII stopped reporting on the queue statuses. We suffered the classic problem of reporting 4444 queued jobs and 0 job slots available.

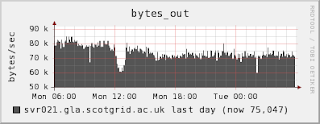

You can see from the plots from the sBDII that the amount of network traffic clearly dips. In fact the gstat graphs show a clear dip in the number of entries. So it was the CE's GRIS that was misbehaving at this point.

I checked the CE for load, job floods, etc. There was nothing abnormal - we got 20 jobs in 1 minute, but we should be able to cope with that ok. There are no logs for the running slapd daemon and nothing odd spotted in /var/log/messages.

We also aborted on a few of Steve Lloyd's tests. However, very weirdly the RB logs are showing an attempt to match a queue on host gla.scotgrid.ac.uk. Where did the name of the CE go? Is this related to the CE GRIS getting in a pickle?

So, one of these anomalous blips in the crappy information system again.
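
One way to catch this sort of blip automatically is to scan the LDIF published for the CE and flag any queue showing the tell-tale combination of 4444 waiting jobs and 0 free slots. The sketch below is a minimal Python illustration under several assumptions: the LDIF has already been fetched as text (for example with ldapsearch), the relevant attributes are `GlueCEStateWaitingJobs` and `GlueCEStateFreeJobSlots`, and the sample entries, including the host ce.example.org, are hypothetical. It ignores LDIF line folding and base64 values.

```python
import re
from typing import Dict, List


def parse_ldif_entries(ldif: str) -> List[Dict[str, str]]:
    """Very small LDIF reader: one dict per blank-line-separated entry."""
    entries = []
    for block in re.split(r"\n\s*\n", ldif.strip()):
        entry: Dict[str, str] = {}
        for line in block.splitlines():
            if line.startswith("#") or ":" not in line:
                continue
            key, _, value = line.partition(":")
            entry[key.strip()] = value.strip()  # last value wins if repeated
        if entry:
            entries.append(entry)
    return entries


def placeholder_queues(ldif: str, waiting_placeholder: int = 4444) -> List[str]:
    """Return the IDs of queues showing the placeholder waiting count and no free slots."""
    flagged = []
    for entry in parse_ldif_entries(ldif):
        waiting = entry.get("GlueCEStateWaitingJobs")
        free = entry.get("GlueCEStateFreeJobSlots")
        if waiting == str(waiting_placeholder) and free == "0":
            flagged.append(entry.get("GlueCEUniqueID", "<unknown>"))
    return flagged


# Hypothetical sample data, not real output from the Glasgow CE.
sample_ldif = """\
dn: GlueCEUniqueID=ce.example.org:2119/jobmanager-lcgpbs-ops,mds-vo-name=local,o=grid
GlueCEUniqueID: ce.example.org:2119/jobmanager-lcgpbs-ops
GlueCEStateWaitingJobs: 4444
GlueCEStateFreeJobSlots: 0

dn: GlueCEUniqueID=ce.example.org:2119/jobmanager-lcgpbs-atlas,mds-vo-name=local,o=grid
GlueCEUniqueID: ce.example.org:2119/jobmanager-lcgpbs-atlas
GlueCEStateWaitingJobs: 12
GlueCEStateFreeJobSlots: 30
"""
print(placeholder_queues(sample_ldif))
```

A check like this would not explain why the GRIS misbehaved, but it would at least record when it happened.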
