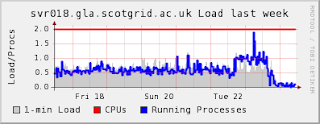

Glasgow is finally out of downtime. GS worked his grid-fu and managed to upgrade lots to SL4, although admittedly some things (RGMA) weren't a goer. APEL accounting could be broken for a while, as we've now split the CE (new home = svr021) from the Torque server (still on svr016). My 'simple' job was to take care of the DPM servers...

Simple enough: we hacked into the YAIM site-info.def stuff and separated things out into services/ and vo.d/ - easy. There were a few gotchas, as cfengine was once again reluctant to create the symlinks on the target nodes (creating the symlinks on the master and replicating those works fine, however), which we thought might be fixed by an upgrade of cfengine from 2.1.22 to 2.2.3. Big mistake. It broke the HostRange function of cfengine.

So we have

dpmdisk = ( HostRange(disk,032-036) HostRange(disk,038-041) )

but cfengine complained that

SRDEBUG FuzzyHostParse(disk,032-041) succeeded for disk033.gla.scotgrid.ac.uk

SRDEBUG FuzzyHostMatch: split refhost=disk033.gla.scotgrid.ac.uk into refbase=disk033.gla.scotgrid.ac.uk and cmp=-1

SRDEBUG FuzzyHostMatch(disk,032-041,disk033.gla.scotgrid.ac.uk) failed

Now I'm not sure if this is due to the problem of short hostname vs FQDN. I've hit a similar issue when I want to copy iptables configs off:

$(skel)/nat/etc/iptables.$(host) mode=0600 dest=/etc/iptables define=newiptables type=sum

needs iptables.host.gla.scotgrid.ac.uk, not just iptables.host, on the master repo.
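To make the short-hostname vs FQDN suspicion concrete, here is a minimal Python sketch of a range match. It is not cfengine's code: the function names `split_host` and `host_in_range` and the `strip_domain` switch are made up for illustration. It only shows how splitting off a trailing number fails on an FQDN, which would be consistent with the `cmp=-1` in the debug output above.

```python
import re


def split_host(name):
    """Split 'disk033' into ('disk', 33).

    Returns (name, -1) when the name does not end in digits, which is
    what happens if the domain part is still attached.
    """
    m = re.match(r"^(.*?)(\d+)$", name)
    if m is None:
        return name, -1
    return m.group(1), int(m.group(2))


def host_in_range(host, base, lo, hi, strip_domain=True):
    """Hypothetical HostRange-style check: base name matches and number is in [lo, hi]."""
    if strip_domain:
        # Compare on the short hostname only.
        host = host.split(".", 1)[0]
    refbase, num = split_host(host)
    return refbase == base and lo <= num <= hi


fqdn = "disk033.gla.scotgrid.ac.uk"
print(split_host(fqdn))                                       # number not found
print(host_in_range(fqdn, "disk", 32, 41, strip_domain=False))
print(host_in_range(fqdn, "disk", 32, 41))
```

If the real matcher is handed the FQDN without stripping the domain first, a failure of this shape is what you would expect to see.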

Anyway, this all seems trivial compared to the hassle with the latest SLC 4X that got mirrored up to the servers overnight (the disk servers run SLC4 rather than SL4 as the Areca RAID card drivers are compiled in). dpm-qryconf kept failing with

send2nsd: NS002 - send error : No valid credential found

and yet the certificates were there and valid: openssl verify ... returned OK, dates were valid, NTP was installed, etc. The dpm log showed

dpm_serv: Could not establish security context: _Csec_recv_token: Connection dropped by remote end !

The really frustrating thing was that the server that I installed from home while munching breakfast (all hail laptops and broadband) worked fine, but those I installed (and reinstalled) later in the office were broken. [hmm. is this a sign that I should stay at home in the mornings and have a leisurely breakfast?]

Puzzling was the fact that the broken servers had more rpms installed than the working ones. I eventually resorted to installing strace on both boxes and diffing the output of 'strace dpm-qryconf'.

The failing one had a big chunk of

open("/lib/tls/i686/sse2/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/lib/tls/i686/sse2", 0xffff9028) = -1 ENOENT (No such file or directory)

open("/lib/tls/i686/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/lib/tls/i686", {st_mode=S_IFDIR|0755, st_size=4096, ...}) = 0

open("/lib/tls/sse2/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/lib/tls/sse2", 0xffff9028) = -1 ENOENT (No such file or directory)

open("/lib/tls/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/lib/tls", {st_mode=S_IFDIR|0755, st_size=4096, ...}) = 0

open("/lib/i686/sse2/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/lib/i686/sse2", 0xffff9028) = -1 ENOENT (No such file or directory)

open("/lib/i686/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/lib/i686", {st_mode=S_IFDIR|0755, st_size=4096, ...}) = 0

open("/lib/sse2/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/lib/sse2", 0xffff9028) = -1 ENOENT (No such file or directory)

open("/lib/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/lib", {st_mode=S_IFDIR|0755, st_size=4096, ...}) = 0

open("/usr/lib/tls/i686/sse2/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/usr/lib/tls/i686/sse2", 0xffff9028) = -1 ENOENT (No such file or directory)

open("/usr/lib/tls/i686/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/usr/lib/tls/i686", 0xffff9028) = -1 ENOENT (No such file or directory)

open("/usr/lib/tls/sse2/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/usr/lib/tls/sse2", 0xffff9028) = -1 ENOENT (No such file or directory)

open("/usr/lib/tls/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/usr/lib/tls", 0xffff9028) = -1 ENOENT (No such file or directory)

open("/usr/lib/i686/sse2/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/usr/lib/i686/sse2", 0xffff9028) = -1 ENOENT (No such file or directory)

open("/usr/lib/i686/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/usr/lib/i686", 0xffff9028) = -1 ENOENT (No such file or directory)

open("/usr/lib/sse2/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/usr/lib/sse2", 0xffff9028) = -1 ENOENT (No such file or directory)

open("/usr/lib/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

stat64("/usr/lib", {st_mode=S_IFDIR|0755, st_size=4096, ...}) = 0

whereas the working one didn't call this at all.
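A raw diff of two strace logs is noisy (addresses and ordering differ), so here is a rough Python sketch of the comparison I was really after: which libraries does the broken box search for and fail to open that the working box never asks for? The function names are invented for this example, and it assumes the traces have been saved as text in the `open("path", ...) = -1 ENOENT` form shown above.

```python
import re

# Matches failed open() calls as printed by strace, capturing the path.
FAILED_OPEN = re.compile(r'^open\("([^"]+)",.*= -1 ENOENT')


def failed_opens(trace_text):
    """Return the paths of all open() calls that failed with ENOENT."""
    paths = []
    for line in trace_text.splitlines():
        m = FAILED_OPEN.match(line.strip())
        if m:
            paths.append(m.group(1))
    return paths


def libs_only_in_broken(broken_trace, working_trace):
    """File names the broken host failed to open that the working host never tried."""
    broken = {p.rsplit("/", 1)[-1] for p in failed_opens(broken_trace)}
    working = {p.rsplit("/", 1)[-1] for p in failed_opens(working_trace)}
    return sorted(broken - working)


broken = '''open("/lib/tls/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)
stat64("/lib/tls", {st_mode=S_IFDIR|0755, st_size=4096, ...}) = 0
open("/usr/lib/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)'''
working = ""
print(libs_only_in_broken(broken, working))
```

On the traces from the two disk servers this would have pointed straight at libstdc++.so.6 instead of leaving me to eyeball the diff.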

I was also bemused as to why acroread had been installed on the server and, more annoyingly, why I couldn't uninstall it.

Yep - someone (step up to the podium Jan Iven) had mispackaged the SLC acroread 8.1.2 update...

rpm -qp ./acroread-8.1.2-1.slc4.i386.rpm --provides

warning: ./acroread-8.1.2-1.slc4.i386.rpm: V3 DSA signature: NOKEY, key ID 1d1e034b

2d.x3d

3difr.x3d

ADMPlugin.apl

Accessibility.api

AcroForm.api

Annots.api

DVA.api

DigSig.api

EFS.api

EScript.api

HLS.api

MakeAccessible.api

Multimedia.api

PDDom.api

PPKLite.api

ReadOutLoud.api

Real.mpp

SaveAsRTF.api

SearchFind.api

SendMail.api

Spelling.api

acroread-plugin = 8.1.2-1.slc4

checkers.api

drvOpenGL.x3d

drvSOFT.x3d

ewh.api

libACE.so

libACE.so(VERSION)

libACE.so.2.10

libACE.so.2.10(VERSION)

libAGM.so

libAGM.so(VERSION)

libAGM.so.4.16

libAGM.so.4.16(VERSION)

libAXE8SharedExpat.so

libAXE8SharedExpat.so

libAXE8SharedExpat.so(VERSION)

libAXSLE.so

libAXSLE.so

libAXSLE.so(VERSION)

libAXSLE.so(VERSION)

libAdobeXMP.so

libAdobeXMP.so

libAdobeXMP.so(VERSION)

libAdobeXMP.so(VERSION)

libBIB.so

libBIB.so(VERSION)

libBIB.so.1.2

libBIB.so.1.2(VERSION)

libBIBUtils.so

libBIBUtils.so(VERSION)

libBIBUtils.so.1.1

libBIBUtils.so.1.1(VERSION)

libCoolType.so

libCoolType.so(VERSION)

libCoolType.so.5.03

libCoolType.so.5.03(VERSION)

libJP2K.so

libJP2K.so

libJP2K.so(VERSION)

libResAccess.so

libResAccess.so(VERSION)

libResAccess.so.0.1

libWRServices.so

libWRServices.so(VERSION)

libWRServices.so.2.1

libadobelinguistic.so

libadobelinguistic.so

libadobelinguistic.so(VERSION)

libahclient.so

libahclient.so

libahclient.so(VERSION)

libcrypto.so.0.9.7

libcrypto.so.0.9.7

libcurl.so.3

libdatamatrixpmp.pmp

libextendscript.so

libextendscript.so

libgcc_s.so.1

libgcc_s.so.1(GCC_3.0)

libgcc_s.so.1(GCC_3.3)

libgcc_s.so.1(GCC_3.3.1)

libgcc_s.so.1(GCC_3.4)

libgcc_s.so.1(GCC_3.4.2)

libgcc_s.so.1(GCC_4.0.0)

libgcc_s.so.1(GLIBC_2.0)

libicudata.so.34

libicudata.so.34

libicui18n.so.34

libicuuc.so.34

libicuuc.so.34

libpdf417pmp.pmp

libqrcodepmp.pmp

librt3d.so

libsccore.so

libsccore.so

libssl.so.0.9.7

libssl.so.0.9.7

libstdc++.so.6

libstdc++.so.6(CXXABI_1.3)

libstdc++.so.6(CXXABI_1.3.1)

libstdc++.so.6(GLIBCXX_3.4)

libstdc++.so.6(GLIBCXX_3.4.1)

libstdc++.so.6(GLIBCXX_3.4.2)

libstdc++.so.6(GLIBCXX_3.4.3)

libstdc++.so.6(GLIBCXX_3.4.4)

libstdc++.so.6(GLIBCXX_3.4.5)

libstdc++.so.6(GLIBCXX_3.4.6)

libstdc++.so.6(GLIBCXX_3.4.7)

nppdf.so

prcr.x3d

tesselate.x3d

wwwlink.api

acroread = 8.1.2-1.slc4

Yep, that's right - because the package claims to provide libstdc++.so.6, libgcc_s.so.1, libssl, libcrypto and friends, RPM had decided that acroread was a dependency. Grr. Workaround: remirror the CERN SLC repo (no, they hadn't updated since), manually remove the offending rpm, and rebuild the metadata with 'createrepo'.
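With hindsight, a check on the mirror before rebuilding the metadata would have caught this. Below is a small Python sketch that scans the text output of `rpm -qp --provides` for capabilities that look like core system libraries. The prefix list and the function name are my own assumptions, not anything shipped by SLC or rpm, and the list would need tuning for a real repository.

```python
# Hypothetical list of library names that only core system packages should provide.
SYSTEM_LIB_PREFIXES = (
    "libstdc++.so",
    "libgcc_s.so",
    "libssl.so",
    "libcrypto.so",
    "libcurl.so",
)


def suspicious_provides(provides_text, prefixes=SYSTEM_LIB_PREFIXES):
    """Return provided capabilities that start with a system library name.

    provides_text is the saved output of 'rpm -qp <package> --provides'.
    Duplicates and rpm warning lines are skipped.
    """
    hits = []
    for line in provides_text.splitlines():
        cap = line.strip()
        if not cap or cap.startswith("warning:"):
            continue
        if cap.startswith(prefixes) and cap not in hits:
            hits.append(cap)
    return hits


sample = """2d.x3d
libssl.so.0.9.7
libssl.so.0.9.7
libstdc++.so.6
libstdc++.so.6(CXXABI_1.3)
acroread = 8.1.2-1.slc4"""
for cap in suspicious_provides(sample):
    print(cap)
```

Any application package that makes this list non-empty is worth a second look before it goes anywhere near the disk servers.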

Then make sure that the nodes were rebuilt and only ever looked to our local repository rather than the primary CERN / dag ones (thanks to kickstart %post and cfengine).

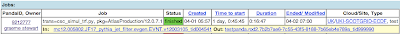

Finally we got yaim to *almost* run - it was failing on lcmaps and grid-mapfile creation (fixed by unsupporting a certain VO).

Easy fix in comparison. Anyway - DPM is up n running and seems OK. Roll on the next SAM tests... (or real users)

Phew.

Grid Middleware should not be this hard to install!